NetCDF is a multidimensional scientific data format especially useful for the representation of complex spatial information (x, y) that also varies in depth (z) or time (t). NetCDFs are leveraged within the ArcGIS platform for superb multidimensional analysis or slick 3D visualizations.

Most of the time, we already have ready-to-use netCDF files, but we can also create netCDF files from any multidimensional data set. In this blog, we’ll show you how to create a netCDF file on python from common formats such as: (1) point shapefile, (2) tiff raster, and (3) comma-separated files.

Before we begin

NetCDF is a powerful data format, but it requires some prior knowledge on how its structure works. You can review some basic concepts on what is netCDF data, fundamentals of netCDF data storage, or accessing multidimensional scientific data using python. Additionally, in this blog we’re following the Climate and Forecast (CF) convention as a standard for the description of attributes, names of variables, and units. You can use the CF convention guidelines as a reference for the documentation of netCDF files.

Sample data

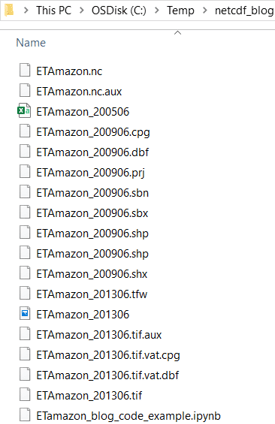

For this exercise, we’ll use a subset of monthly evapotranspiration (ET) from ET-Amazon for the state of Rondônia in Brazil. This region has been subject to deforestation, observed as a decrease in ET. The ET-Amazon data set has reports ET (mm/month) in a three-dimensional space: x, y, and t.

After you downloaded the ETAmazon_subset.zip file, extract its contents into your local computer to the C:\temp\netcdf_blog directory.

Load libraries and create empty netCDF file

In python, load the required libraries using the import statement. Assign the directory of the extracted data to the data_path variable. Use the netCDF4.Dataset function to create an empty netCDF file. Remember to use the the *.nc extension in the name of the file and the ‘w’ option to be able to write to the netCDF file.

import netCDF4

import os

import datetime as dt

import numpy as np

import arcpy

import csv

# Data path

data_path = r'C:\temp\netcdf_blog'

# Create NetCDF File

output_nc = os.path.join(data_path, 'ETAmazon_20200406.nc')

nc = netCDF4.Dataset(output_nc, 'w')Global attributes

Global attributes are key to describe the netCDF to other collaborators or users. Remember to review the CF convention for a complete list of required and suggested attributes.

# Global attributes

nc.title = 'ET-Amazon'

nc.summary = ('Actual monthly evapotranspiration in Rondonia, Brazil '

'for June 2005, 2009, and 2013')

nc.keywords = 'Evapotranspiration, Amazon, Water cycle'

nc.license = ('This work is licensed under a Creative Commons '

'Attribution 4.0 International License.')

nc.references = ('Paca, V.H., Espinoza-Davalos, G.E., Hessels, T.M., '

'Moreira, D., Comair, G.F., Bastiaanssen, W. (2019). '

'The Spatial Variability of Actual Evapotranspiration '

'Across the Amazon River Basin Based on Remote Sensing '

'Models Validated with Flux-Towers. Ecological Processes. '

'8(1), 6. https://doi.org/10.1186/s13717-019-0158-8')

nc.source = 'https://www.hydroshare.org/resource/24792a48a6394dcba52da62fa324ae40/'

nc.Conventions = 'CF-1.6'

nc.institution = 'Esri'

nc.history = '{0} creation of ET-Amazon netcdf file.'.format(

dt.datetime.now().strftime("%Y-%m-%d")

)Create dimensions

Create three dimensions: one for longitude (x), one for latitude (y) and one for time (t). In the sample data, we have values for 176 rows (y), 244 columns (x), and 3 months (t).

# Create dimensions

lat_dim = nc.createDimension('latitude', 176)

lon_dim = nc.createDimension('longitude', 244)

tim_dim = nc.createDimension('time', 3)Create variables

Create five variables: one variable per dimension (longitude, latitude, and time), one variable for the mapping grid (crs), and one variable for the evapotranspiration values (ET). Notice that each variable have associated dimensions to them. The longitude, latitude, and time variables have only their own dimension associated to each one of them. The mapping grid (crs) has zero dimensions, and ET has three dimensions (in order: time, latitude, and longitude) for a total of 176 x 244 x 3 = 128,832 data values.

# Create variables

lat_var = nc.createVariable('latitude', np.float64, ('latitude'))

lat_var.units = 'degrees_north'

lat_var.standard_name = 'latitude'

lat_var.axis = 'Y'

lon_var = nc.createVariable('longitude', np.float64, ('longitude'))

lon_var.units = 'degrees_east'

lon_var.standard_name = 'longitude'

lon_var.axis = 'X'

time_var = nc.createVariable('time', np.int32, ('time'))

time_var.standard_name = 'time'

time_var.calendar = 'gregorian'

time_var.time_step = 'Monthly'

time_var.units = 'Seconds since 1970-01-01 00:00:00'

time_var.axis = 'T'

crs_var = nc.createVariable('crs', np.int8, ())

crs_var.standard_name = 'crs'

crs_var.grid_mapping_name = 'latitude_longitude'

crs_var.crs_wkt = ("GEOGCS['GCS_WGS_1984',DATUM['D_WGS_1984',"

"SPHEROID['WGS_1984',6378137.0,298.257223563]],"

"PRIMEM['Greenwich',0.0],"

"UNIT['Degree',0.0174532925199433]]")

et_var = nc.createVariable('ET', np.int16, ('time', 'latitude', 'longitude'),

fill_value=9999)

et_var.units = 'mm/month'

et_var.long_name = 'Actual Evapotranspiration'

et_var.short_name = 'ET'

et_var.grid_mapping = 'crs'Load values

Let’s load the values for the variables of time, latitude, longitude, and evapotranspiration.

Time

Use the datetime library to calculate the seconds since epoch (01/01/1970). Store the values in a list and store them in the times_values variable.

# Load values: time

date_200506 = int((dt.datetime(2005,6,1) - dt.datetime(1970,1,1)).total_seconds())

date_200906 = int((dt.datetime(2009,6,1) - dt.datetime(1970,1,1)).total_seconds())

date_201306 = int((dt.datetime(2013,6,1) - dt.datetime(1970,1,1)).total_seconds())

time_values = [date_200506, date_200906, date_201306]

time_var[:] = time_valuesLatitude and Longitude

Create two lists using np.arange for the latitudes and longitudes of the center points of the grid cells. The grid cells are spaced 0.0025 degrees. Add or subtract half-cell size (i.e. 0.0025/2) to the starting point to get the coordinates at the middle instead of the edge of the grid cells.

# Load values: latitude and longitude

lat_values = np.arange(-9.6491667 - 0.0025/2, -10.0891667, -0.0025)

lon_values = np.arange(-64.735 + 0.0025/2, -64.125, 0.0025)

lat_var[:] = lat_values

lon_var[:] = lon_valuesEvapotranspiration

Let’s explore three different ways to load values into the ET variables: from a raster, a point shapefile, and a csv file. In short, we load the values using python libraries like arcpy, csv, or numpy and save them into the et_var variable array.

Load values from raster

Use the read method of the arcpy.Raster object to load the values of the ETAmazon_201306.tif raster. Store the array into time third position (index=2)

# Load ET values from raster

ras_201306 = os.path.join(data_path, 'ETAmazon_201306.tif')

raslyr_201306 = arcpy.Raster(ras_201306)

array_201306 = raslyr_201306.read()

et_var[2, :, :] = array_201306[:, :, 0]Note: As an alternative workflow, we could also use the arcpy.RasterToNumPyArray function to load the values of the ETAmazon_201306.tif raster.

# Load ET values from raster

ras_201306 = os.path.join(data_path, 'ETAmazon_201306.tif')

array_201306 = arcpy.RasterToNumPyArray(ras_201306)

et_var[2, :, :] = array_201306Load values from point shapefile

Use a SearchCursor to read the values for each point in the ETAmazon_200906.shp shapefile. Get the longitude and latitude indices (i, j) by identifying the closest longitude or latitude values, that is where the absolute difference is the lowest. Store the array into time second position (index=1).

# Load ET values from point shapefile

shp_200906 = os.path.join(data_path, 'ETAmazon_200906.shp')

with arcpy.da.SearchCursor(shp_200906, ['SHAPE@X', 'SHAPE@Y', 'ET']) as cursor:

for row in cursor:

i = (np.abs(lon_values - row[0])).argmin()

j = (np.abs(lat_values - row[1])).argmin()

et_var[1, j, i] = row[2]Load values from csv file

Open the ETAmazon_200506.csv file and read the values as dictionaries, using the headers as keys. Similarly as with the point shapefile, identify the indices (m, n) of the closest latitude and longitude values. Store the array into time first position (index=0).

# Load ET values from csv

file_200806 = os.path.join(data_path, 'ETAmazon_200506.csv')

with open(file_200806, 'r') as f:

reader = csv.DictReader(f)

for line in reader:

m = (np.abs(lon_values - float(line['longitude']))).argmin()

n = (np.abs(lat_values - float(line['latitude']))).argmin()

et_var[0, n, m] = line['ET']Close netCDF file

Finally, close the nc file.

nc.close()

Summary

NetCDF is a multidimensional data format that can be used extensively in the ArcGIS platform, from 3D visualizations to multidimensional analysis of complex data sets. It is easy to create netCDF files using python, from existing data formats such as rasters, point shapefiles, or csv files. NetCDF’s are self-describing, meaning software packages can directly read the data and determine format. The structure contains the variable names and essential metadata such as units. These capabilities also lend the netCDF format to be used widely for scientific data archival. Government agencies such as NASA and NOAA use netCDF format to deliver data to end users.

More information?

Join GeoNet and ask a question to our community of experts.

References

Paca, V., Espinoza-Dávalos, G.E., Hessels, T.M. et al. The spatial variability of actual evapotranspiration across the Amazon River Basin based on remote sensing products validated with flux towers. Ecol Process 8, 6 (2019). https://doi.org/10.1186/s13717-019-0158-8

Article Discussion: