The Accuracy and Precision Revolution

What's ahead for GIS?

By Jeffery S. Nighbert, U.S. Bureau of Land Management


|

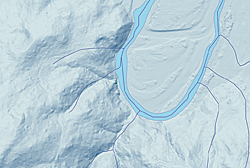

Figure 1

Typical base data displayed at 1:10,000 scale. Hillshade and contours were derived from the National Elevation Dataset, hydrography from the National Hydrography Dataset, and roads from internal files. |

The ability to obtain precise information is nothing new. With great patience and skill, mapmakers and land surveyors have long been able to create information with an impressive level of accuracy. Today, however, the ability to determine and view locations with submeter accuracy is in the hands of millions of people. Commonly available high-resolution digital terrain data and aerial imagery, coupled with GPS-enabled handheld devices, powerful computers, and Web technology, are changing the quality, utility, and expectations of GIS to serve society on a grand scale. This accuracy and precision revolution has raised the bar for GIS quite high. This pervasive capability will drive the next iteration of GIS and of the professionals who operate it.

When I say there is a "revolution" going on in GIS, I am referring to the change in the fundamental accuracy and precision kernel of commonly used geographic data brought about by the new technologies mentioned above. For many ArcGIS users, this kernel used to be about 10 meters or 40 feet at a scale of 1:24,000. With today's technologies (and those in the future), GIS will be using data with 1-meter and submeter accuracy and precision. There are probably GIS departments, in a large city or metro area, where this standard is already in place. However, this level of detail is far from the case in natural resource management agencies such as the Bureau of Land Management (BLM) or the United States Forest Service. But as lidar, GPS, and high-resolution imagery begin to proliferate as standard sources for "ground" locations, GIS professionals will begin to feel the consequences in three areas: data quality, analytic methods, and hardware and software.
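The 40-foot figure at 1:24,000 is consistent with the horizontal tolerance in the U.S. National Map Accuracy Standards, which allow 1/50 inch of error on the map at publication scales of 1:20,000 or smaller (and 1/30 inch at larger scales). The sketch below works through that arithmetic. It assumes that standard is where the number comes from, which the article does not state, and the function name is made up for illustration.

```python
INCHES_PER_FOOT = 12
METERS_PER_FOOT = 0.3048


def nmas_horizontal_tolerance_ft(scale_denominator):
    """Ground distance, in feet, of the map tolerance at a given scale.

    Assumption: National Map Accuracy Standards tolerances of 1/30 inch
    on the map for scales larger than 1:20,000 and 1/50 inch for scales
    of 1:20,000 or smaller.
    """
    map_inches = 1 / 30 if scale_denominator < 20_000 else 1 / 50
    return scale_denominator * map_inches / INCHES_PER_FOOT


tolerance_ft = nmas_horizontal_tolerance_ft(24_000)
tolerance_m = tolerance_ft * METERS_PER_FOOT
print(f"1:24,000 -> {tolerance_ft:.1f} ft ({tolerance_m:.1f} m)")
```

At 1:24,000 this works out to 40 feet, or roughly 12 meters, which is in line with the "about 10 meters" quoted above.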

Data Quality

As we try to integrate highly resolved data into existing GIS, the errors in legacy data will become more apparent. The expectation is that data is as accurate and precise as possible, so new geometry must be developed either through editing or by capturing new data. We will need to be more careful about documentation and mindful of appropriately mixing data in databases. Figures 1 through 4 illustrate the problems GIS professionals might encounter as they integrate more accurate data into GIS operations. For these illustrations, I used recently acquired lidar elevation data.

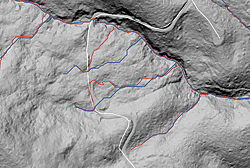

Figure 2

This is typical base data displayed at 1:24,000 scale. The hillshade and contours were derived from the National Elevation Dataset, hydrography from the National Hydrography Dataset, and roads from internal files. Red square indicates enlargement area for Figures 3 and 4.

Figure 1 illustrates a typical base dataset displayed at 1:10,000 scale. Hillshade and contours have been derived from the U.S. Geological Survey National Elevation Dataset. The hydrography came from the U.S. Geological Survey National Hydrography Dataset. Roads were taken from BLM internal files. The accuracy and precision of this data are typical of the data used by natural resource management agencies such as the BLM and Forest Service. Most of the data used in these databases was originally derived from U.S. Geological Survey 1:24,000-scale topographic maps or from existing paper maps of lesser quality. Only in recent years has data been developed using GPS or heads-up digitizing from large-scale imagery or photography. Until recently, I considered the quality of this data pretty good since, at commonly used scales ranging from 1:10,000 to 1:100,000, I could not readily detect any flaws.

Figure 2 shows hillshade and hydrography displayed at 1:24,000 scale, which is the intended scale of the data. The problem occurs when, because this is the highest resolution in the GIS, this same data is used for scales larger than 1:24,000. Note how hydrography matches the terrain (hillshade) in most areas.

In Figure 2, a red square marks the area magnified in Figures 3 and 4, where flaws in the data become painfully apparent. For the most part, the hydrography follows the terrain in Figure 3 at a scale of 1:2,400 (about 1-meter resolution, which is the pixel size of the bare earth lidar data).

|

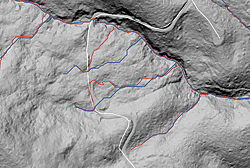

Figure 3

Hillshade and hydrography displayed at 1:24,000 scale. Note how hydrography matches terrain (hillshade) in most areas. |

Figure 4

Enlarged area displayed at 1:2,400 shows slight discrepancies between hydrography and terrain.

Figure 4 uses a hillshade of the bare earth returns from 1-meter lidar data. In this figure, one can see how poorly the hydrography matches the terrain at 1-meter resolution. The hydro linework does not follow the drainages very closely. There are errors of omission, where there should be linework but there is none, and other errors where the linework is simply wrong.

Analytic Methods

Analytic methods will need to change as we learn to use data with greater detail and intensity. Processes that might have worked at 10-meter resolution will now need modification. Figure 5 illustrates the type of problems encountered when we attempted to automate stream generation on a half-meter digital elevation model (DEM) derived from lidar. The red line represents the stream drainage that should have been generated, while the darker blue line is what the program produced. The increased accuracy and precision caused the stream delineation program to send the course of the stream along the roadbed. Special programming had to be added to make automated watercourse line generation successful. (Note the white line represents a road from the BLM database that does not follow the roadbed as indicated by the lidar information.)
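The article does not name the stream delineation program or describe the special programming that fixed it. As a rough illustration of the kind of effect described, the sketch below assumes a simple D8 (steepest-descent) flow trace on an invented 5 x 5 elevation grid in which a raised road embankment crosses a small valley. The grid values, the function name, and the idea of lowering a cell where a culvert would sit are all assumptions made for this example, not the author's method.

```python
import math


def d8_path(dem, start):
    """Trace a flow path by stepping to the steepest downhill neighbor (D8)."""
    rows, cols = len(dem), len(dem[0])
    path = [start]
    r, c = start
    while True:
        best, best_slope = None, 0.0
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr, dc) == (0, 0) or not (0 <= nr < rows and 0 <= nc < cols):
                    continue
                # Drop per unit distance; diagonal neighbors are sqrt(2) away.
                slope = (dem[r][c] - dem[nr][nc]) / math.hypot(dr, dc)
                if slope > best_slope:
                    best, best_slope = (nr, nc), slope
        if best is None:  # no lower neighbor: the trace ends here
            return path
        path.append(best)
        r, c = best


# Invented elevations in meters. The valley runs down column 2, and row 2
# is a raised road embankment that dips only at its east end.
dem = [
    [10.0, 9.8, 9.5, 9.8, 10.0],
    [9.9, 9.6, 9.0, 8.9, 8.8],
    [9.5, 9.5, 9.5, 9.5, 8.5],
    [9.0, 8.6, 8.0, 8.4, 8.3],
    [8.8, 8.2, 7.5, 8.0, 8.2],
]

diverted = d8_path(dem, (0, 2))

# Hypothetical workaround: lower the embankment cell where a culvert
# would carry the stream under the road.
breached_dem = [row[:] for row in dem]
breached_dem[2][2] = 8.5
direct = d8_path(breached_dem, (0, 2))

print("with embankment:", diverted)
print("with culvert cell lowered:", direct)
```

With the embankment in place, the trace turns east along the upslope side of the road and crosses at the dip before returning to the valley. With the culvert cell lowered, it runs straight down column 2. A coarser elevation model would likely not resolve the embankment at all, which is one reason a process that worked at 10-meter resolution can need modification at finer resolutions.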

Hardware and Software

High-resolution GIS data is expensive to store, use, and manage. For example, a 1-meter resolution elevation model is 100 times larger than the equivalent area of a 10-meter elevation model. One-half-meter color imagery from the National High Altitude Photography program is actually 12 times larger than the equivalent area in 1-meter black-and-white images. Vector data collected at 1 meter between points could be 10 times larger than when collected using the 1:24,000 standard of 40 feet. For land management agencies, where GIS data represents broad expanses of administrative territory, the increased need for disk storage is huge.
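The ratios above follow from simple scaling, which the sketch below reproduces. It assumes uncompressed data, the same number of bytes per stored value, three bands for color imagery against one for black and white, and vertex counts that grow in proportion to point spacing; the function names are illustrative.

```python
METERS_PER_FOOT = 0.3048


def raster_size_ratio(coarse_cell_m, fine_cell_m, coarse_bands=1, fine_bands=1):
    """How many times more values the finer raster stores for the same area."""
    cells = (coarse_cell_m / fine_cell_m) ** 2  # cell count grows with the square
    return cells * fine_bands / coarse_bands


def vertex_count_ratio(coarse_spacing_m, fine_spacing_m):
    """How many times more vertices a line needs at the finer point spacing."""
    return coarse_spacing_m / fine_spacing_m


# 10-meter versus 1-meter elevation model
print(raster_size_ratio(10, 1))

# 1-meter black-and-white (1 band) versus half-meter color (3 bands)
print(raster_size_ratio(1, 0.5, coarse_bands=1, fine_bands=3))

# 40-foot spacing versus 1-meter spacing for vector data
print(round(vertex_count_ratio(40 * METERS_PER_FOOT, 1), 1))
```

The first two ratios come out to 100 and 12, matching the figures in the text. The vector ratio comes out near 12, the same order as the "10 times" estimate.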

Requirements for core processing memory and hardware capability have also greatly increased. These requirements create problems when the size of the data exceeds the maximum addressable memory space. You may have noticed this problem in vector functions such as overlay, dissolve, and union. Increased coordinate and pixel density also slows down response time and clogs up networks. Obviously, computer capabilities will need to keep pace!

Figure 5

Data from the National Elevation Dataset displayed against a hillshade of lidar bare earth at 1:2,400. Notice many errors, discrepancies, and omissions.

There may be a rise in data service providers and the technology to support them: you may get your data from a third party via the Internet. New equipment and data management strategies will be needed to process such intense data.

New technologies can help us make the transition to the "new GIS data." We should be looking to cloud computing services. In simple terms, cloud computing is nothing more than Internet-based data or computer services that provide specific products to GIS. When you use ArcGIS Online, you are using cloud computing. Similar services could provide lidar data or processing to large groups of people, which would allow smaller companies and groups to leverage the data while avoiding the expense of maintaining the functionality in-house.

Conclusion

As a GIS professional, I have spent most of my career striving to build and improve the accuracy and precision of GIS databases as well as the overall data quality of the BLM's information. The advent of higher accuracy and precision data is great news! For new GIS professionals, a tremendous and exciting time lies ahead as they begin building a new geographic foundation for the world.

Do not despair over small details and technical problems; they have a way of solving themselves over time. The bigger issue, of course, is how to use this new and better data to meet customers' needs. The current saying among GIS folks is to use the "best available" data. I am thinking a new mantra would be to use the "most appropriate" data.

About the Author

Jeffery S. Nighbert has been a geographer with the Bureau of Land Management for more than 30 years and is currently the senior technical specialist for GIS at the Oregon State office, located in Portland, Oregon. He has extensive experience in GIS and holds a master's degree in geography from the University of New Mexico.
