TL;DR – We learned many lessons from swapping out our authentication system in Qt Maps SDK for the shared API used by the ArcGIS Maps SDKs for Swift, Kotlin, and Flutter. What looked like a targeted migration became a complex project involving technical, logistical, and usability challenges. By building the new authentication system in parallel with the legacy system, we were able to ship when we were ready, keep quality high, and de-risk the rollout.

Backstory

If you’ve followed ArcGIS Maps SDKs for Native Apps (formerly ArcGIS Runtime) for a while, you’ve likely heard us talk about our shared C++ Core (aka Core or Runtime Core): a single engine that powers multiple SDKs, including Native Maps SDKs for .NET, Qt, Swift, Kotlin, and Flutter. Features are designed and implemented in Core, and each SDK team wraps Core to expose APIs using the conventions of their respective framework. Following this pattern keeps features and quality consistent while avoiding duplicated work. This architecture isn’t without challenges, but it’s been our secret sauce over the years for bringing ArcGIS to so many different platforms and frameworks, as seen in our recent roll-out of the brand-new Flutter Maps SDK.

While the Core-first architecture works for most features, there are exceptions where an SDK team builds its own functionality outside of Core. Sometimes there are technical reasons; other times it’s simply historical or resourcing related. Authentication was historically one of those outliers. That changed in 2023 with the arrival of the new Native Maps SDKs for Swift and Kotlin. Faced with the prospect of writing two separate implementations for the new products, the group made a strategic decision: invest in a single, shared authentication API in Core. Not long after, the Native Maps SDK for Flutter joined the lineup. Thanks to the new Core API, it spun up its authentication system quickly, validating the decision.

Meanwhile, Qt Maps SDK had been using the same authentication system for nearly a decade. While it did the job, adding new features and fixing bugs was becoming increasingly difficult. Observing the successes of the Core innovations from the sidelines, our Qt team decided to align with this new pattern. This alignment would modernize our supported environments and workflows, reduce maintenance, improve quality, and unify behavior across Native Maps SDKs.

Now that you’ve seen how the Native Maps SDKs team values code reuse and support for many platforms, let’s zoom into the Qt Maps SDK team and see how we migrated to a new authentication system. We embraced many different techniques, from elegant object-oriented programming patterns to less-desirable, but pragmatic copy-paste efforts.

Setting the stage

We started planning in early 2024, with a major semantic versioning release, 300.0, on the horizon (targeting April 2026).

The goal: Implement and release the new Authentication API by version 300.0.

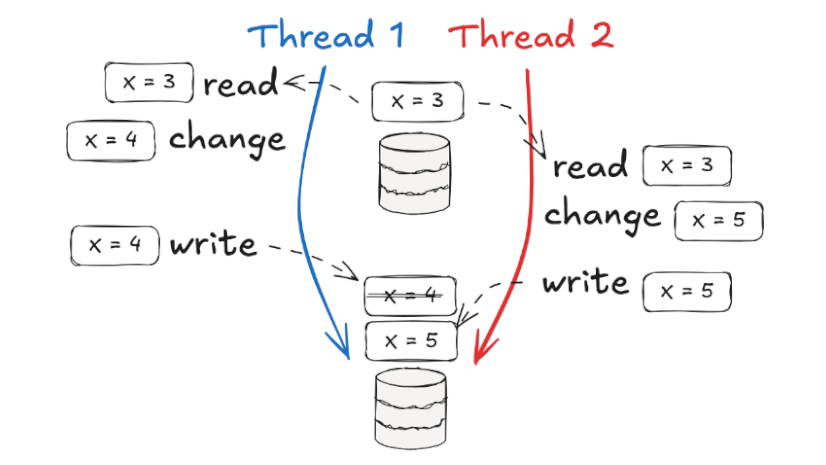

After an initial research spike and prototype, we quickly realized we could not make both systems work together, nor could we shim the old authentication system with the new system under the hood. While it would be great to avoid breaking changes for users, the two systems were too different, and we would regret merging the systems in the long run. We could, however, have both systems exist side-by-side, allowing users to opt into the system of their choosing.

With that initial information in place, we settled on the most important decision of the project: to build and release the new system alongside the legacy system. We then set forth the following principles:

- Functional equivalence is critical – The new API must match legacy capabilities before it can be considered a viable replacement.

- Don’t compromise the long-term vision – The new API was our chance to reset. Temporary inconveniences, old behaviors, and historical oddities should not influence the new API.

- Smooth migration path for users – Accepting that there would not be API compatibility, there must be a clear and simple upgrade path for users.

- Plan for a clean removal – The new API would eventually replace the old API, so the two systems should share no dependencies that entangle them.

- Quality is non-negotiable – Both systems must be production ready during the transition.

With this vision in mind, we knew we could release the new API in phases and ultimately deprecate and remove the legacy API in version 300.0.

Mapping the road ahead

After reviewing the designs and scope of work from a high level, we sketched out an initial roadmap:

On paper, it was a clean, iterative plan. However, once we got started, the challenges began to pile up.

Challenges

Challenge #1 – Naming conflicts

We wanted to keep canonical names (e.g., AuthenticationManager) in the new API. However, preserving those names clashed with identically named types in the legacy API. C++ offers namespaces to scope and disambiguate classes, but our C++ API had always lived in a single namespace: Esri::ArcGISRuntime.

Solution: introduce a dedicated Authentication namespace, Esri::ArcGISRuntime::Authentication.
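A minimal sketch of the namespace approach, using hypothetical, stripped-down class bodies (the real SDK classes look nothing like this): both types keep the canonical name AuthenticationManager and are told apart only by their enclosing namespace. In plain C++ this compiles cleanly; the trouble described next came from the build tooling, not the language.

```cpp
#include <string>

namespace Esri {
namespace ArcGISRuntime {

// Legacy class: keeps its canonical name in the original namespace.
// (Hypothetical body for illustration only.)
class AuthenticationManager
{
public:
  std::string system() const { return "legacy"; }
};

namespace Authentication {

// New Core-backed class: same canonical name, separate nested namespace.
class AuthenticationManager
{
public:
  std::string system() const { return "new"; }
};

} // namespace Authentication
} // namespace ArcGISRuntime
} // namespace Esri
```

In client code with `using namespace Esri::ArcGISRuntime;`, the two remain distinguishable as `AuthenticationManager` (legacy) and `Authentication::AuthenticationManager` (new).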

Simple, right? Unfortunately not: the change broke our Windows builds. How? Qt uses a tool called the Meta-Object Compiler (MOC) to provide functionality not available in standard C++ (like signals and slots for events). The MOC runs as a preprocessing step of the build and generates an additional source file, prefixed with “moc_”, for each header that contains the Q_OBJECT macro. For example, AuthenticationManager.h would generate moc_AuthenticationManager.cpp. Next, the compiler and linker run and attempt to combine the resulting object files. Due to platform idiosyncrasies with the MOC on Windows, we ended up with duplicate symbols and a broken build, even though the two classes lived in different namespaces. We eventually worked through it with some clever build tricks. It was a good reminder that even “simple” naming decisions can spiral into unexpected technical challenges.

Challenge #2 – Preventing mixed use

The legacy and new Authentication systems were designed to work independently. Importantly, developers must choose a system before the first network request goes out. If you intermix the two systems, unexpected bad things will happen. Unfortunately, early testing of our prototype showed that it was too easy to mix the systems by accident, even for us, and we knew not to! So we decided to improve usability by adding two guardrails:

- Folder separation – All headers for the new system would live inside an Authentication subfolder within our SDK’s include directory. This guardrail gave developers a clear visual cue when adding classes to their projects, reinforcing the separation.

```cpp
// Legacy
#include "AuthenticationManager.h"

// New (Core-backed)
#include "Authentication/AuthenticationManager.h"
```

- Build-time header guards – We added build-time checks that would break your build if you tried to mix the two systems. For example:

```cpp
// New system headers
#define NEW_AUTH 1

#if defined(LEGACY_AUTH) && !defined(DISABLE_AUTH_MIXED_USE_ERROR)
#error "Cannot mix legacy and new Authentication systems. Define DISABLE_AUTH_MIXED_USE_ERROR to disable this error."
#endif

// Legacy system headers
#define LEGACY_AUTH 1

#if defined(NEW_AUTH) && !defined(DISABLE_AUTH_MIXED_USE_ERROR)
#error "Cannot mix legacy and new Authentication systems. Define DISABLE_AUTH_MIXED_USE_ERROR to disable this error."
#endif
```

We considered runtime logging instead, but misuse often happens before the first network request, so logging on the network path could not catch it reliably. Compile-time checks were reliable and effective, so we stuck with them.
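The guard above is plain preprocessor logic, so it fires only when both sets of headers end up in the same translation unit; a project where one .cpp file includes only legacy headers and another includes only new headers would still compile. The sketch below, which inlines what each header contributes rather than including real SDK headers, shows the opt-out path: defining DISABLE_AUTH_MIXED_USE_ERROR before either set is seen lets a single file (for example, a hypothetical migration shim) compile with both. The kUsesLegacyAuth and kUsesNewAuth constants are illustrative only.

```cpp
// Opt out of the check. This must come before either set of headers.
#define DISABLE_AUTH_MIXED_USE_ERROR 1

// --- What a legacy-system header contributes ---
#define LEGACY_AUTH 1
#if defined(NEW_AUTH) && !defined(DISABLE_AUTH_MIXED_USE_ERROR)
#error "Cannot mix legacy and new Authentication systems. Define DISABLE_AUTH_MIXED_USE_ERROR to disable this error."
#endif

// --- What a new-system header contributes ---
// Without the opt-out above, the #error below would stop the build here.
#define NEW_AUTH 1
#if defined(LEGACY_AUTH) && !defined(DISABLE_AUTH_MIXED_USE_ERROR)
#error "Cannot mix legacy and new Authentication systems. Define DISABLE_AUTH_MIXED_USE_ERROR to disable this error."
#endif

// Record, at compile time, which systems this translation unit pulled in.
#ifdef LEGACY_AUTH
constexpr bool kUsesLegacyAuth = true;
#else
constexpr bool kUsesLegacyAuth = false;
#endif

#ifdef NEW_AUTH
constexpr bool kUsesNewAuth = true;
#else
constexpr bool kUsesNewAuth = false;
#endif
```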

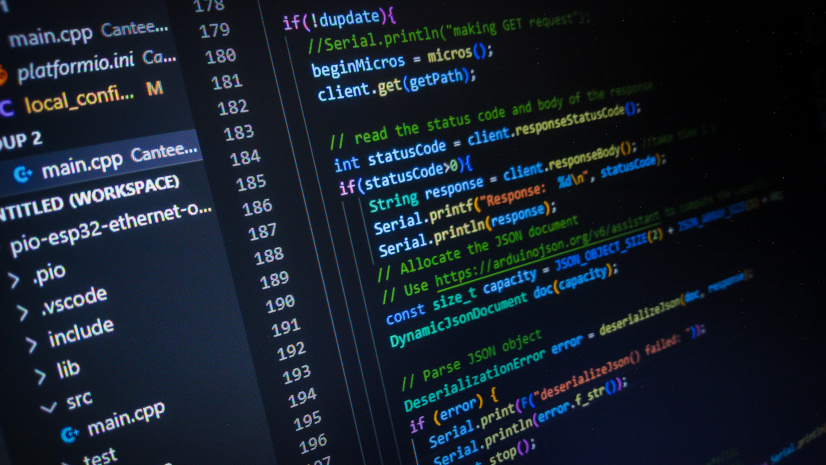

Challenge #3 – Refactoring the networking stack

The naming and usability issues we tackled early on were important, but they were relatively contained. The biggest challenge that truly reshaped the project was realizing that the new authentication system wasn’t just an authentication overhaul. It also introduced a new HTTP network request pattern.

Previously, every individual object in our API made its own network requests by connecting to a Core callback called RequestRequired. Core fired the callback whenever a request was needed, and the API object would issue the request and send the response back to Core. In the new system, however, all network calls go through a single, stateless callback, referred to as the Global Request Handler. This new pattern was a major paradigm shift, and it fundamentally changed the way networking worked in Qt Maps SDK. We not only had to build new networking internals to handle the Global Request Handler, but we also needed to make the rest of the API support both the per-object and global request patterns. To accomplish this, we employed the Strategy Pattern, which allowed us to select the appropriate request mechanism at runtime.
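A minimal sketch of that Strategy Pattern, with hypothetical names throughout (Request, Response, RequestStrategy, PerObjectRequestStrategy, GlobalRequestStrategy, ApiObject, makeRequestStrategy). The real request path is asynchronous and driven by Core; this synchronous version only shows how an API object can be handed one of two interchangeable request mechanisms.

```cpp
#include <functional>
#include <memory>
#include <string>
#include <utility>

// Hypothetical request/response types; the real Core types are richer and async.
struct Request
{
  std::string url;
};

struct Response
{
  int status;
  std::string body;
};

// Strategy interface: how an API object gets its HTTP requests executed.
class RequestStrategy
{
public:
  virtual ~RequestStrategy() = default;
  virtual Response send(const Request& request) = 0;
};

// Legacy strategy: stands in for the per-object RequestRequired flow.
// Here it simply returns a canned response tagged with its origin.
class PerObjectRequestStrategy : public RequestStrategy
{
public:
  Response send(const Request& request) override
  {
    return {200, "per-object:" + request.url};
  }
};

// New strategy: every request is forwarded to one stateless, app-wide handler.
using GlobalRequestHandler = std::function<Response(const Request&)>;

class GlobalRequestStrategy : public RequestStrategy
{
public:
  explicit GlobalRequestStrategy(GlobalRequestHandler handler) : m_handler(std::move(handler)) {}

  Response send(const Request& request) override { return m_handler(request); }

private:
  GlobalRequestHandler m_handler;
};

// Picks a strategy at runtime from the authentication system the app opted into.
std::unique_ptr<RequestStrategy> makeRequestStrategy(bool useNewAuthentication,
                                                     GlobalRequestHandler handler)
{
  if (useNewAuthentication)
    return std::unique_ptr<RequestStrategy>(new GlobalRequestStrategy(std::move(handler)));
  return std::unique_ptr<RequestStrategy>(new PerObjectRequestStrategy());
}

// A hypothetical API object that delegates networking to its strategy.
class ApiObject
{
public:
  explicit ApiObject(std::unique_ptr<RequestStrategy> strategy) : m_strategy(std::move(strategy)) {}

  Response load() { return m_strategy->send(Request{"https://example.com/service"}); }

private:
  std::unique_ptr<RequestStrategy> m_strategy;
};
```

The point of the design is that ApiObject never branches on which system is active; the choice is made once, when the strategy is created.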

In addition to supporting the two request patterns, we now had many public API deprecations to make. Every API that let you explicitly set a Credential object on a class would be deprecated, because that pattern only works with the per-object request mechanism, which would soon be obsolete.
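One standard way to flag such APIs in C++14 and later is the `[[deprecated("...")]]` attribute, which makes compilers warn at every call site without breaking existing builds. The sketch below is a hypothetical illustration (Credential, ServiceLayer, and the message text are invented), not how the SDK itself marks its deprecations.

```cpp
#include <memory>
#include <string>
#include <utility>

// Hypothetical credential type for illustration only.
struct Credential
{
  std::string username;
};

// Hypothetical API class that historically accepted a per-object credential.
class ServiceLayer
{
public:
  explicit ServiceLayer(std::string url) : m_url(std::move(url)) {}

  // Only works with the per-object request mechanism, so it is deprecated.
  // Callers get a compiler warning (not an error) until the API is removed.
  [[deprecated("Per-object credentials are deprecated; use the new Authentication API.")]]
  void setCredential(std::shared_ptr<Credential> credential)
  {
    m_credential = std::move(credential);
  }

  [[deprecated("Per-object credentials are deprecated; use the new Authentication API.")]]
  std::shared_ptr<Credential> credential() const { return m_credential; }

  // Unaffected by the migration.
  const std::string& url() const { return m_url; }

private:
  std::string m_url;
  std::shared_ptr<Credential> m_credential;
};
```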

But our problems didn’t end there! Our Portal API was mostly implemented at the API level rather than in Core. The strategy we put in place to support both the per-object and global request mechanisms worked for Core-backed features, but not for API-level features. We spoke with our colleagues and learned that there was an internal class, ClientRequestSender, designed for exactly this scenario. This class allowed us to ask Core to issue a network request on our behalf, which would then get routed through the Global Request Handler, ensuring API-level requests followed the same path as Core requests. Unfortunately, it also meant we needed to add extra logic across our entire Portal API. We ended up solving this through inheritance and polymorphism; each Portal class would have a subclass that handles the new client request pattern in an overridden virtual method.

```cpp
// Pre-200.8 implementation
class PortalGroupImpl
{
public:
  void fetchGroupUsers()
  {
    // Make the request
    m_networkManager->sendRequest(...);
  }
};

// New implementation (for the legacy system)
class PortalGroupImpl
{
public:
  virtual ~PortalGroupImpl() = default;

  virtual void fetchGroupUsers()
  {
    // Make the request on our own
    m_networkManager->sendRequest(...);
  }
};

// New implementation (for the new system)
class CorePortalGroupImpl : public PortalGroupImpl
{
public:
  void fetchGroupUsers() override
  {
    // Ask Core to issue the request for us
    ClientRequestSender::sendRequestAsync(...);
  }
};
```

As you can see, what started as a targeted authentication overhaul quickly became a full-blown networking stack refactor.
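To see the polymorphic dispatch end to end, here is a runnable variant of the PortalGroupImpl classes. The stub transports (LegacyNetworkManager, ClientRequestSenderStub), the URL, and the makePortalGroupImpl factory are hypothetical; the stubs only record which path each request took, whereas the real senders are asynchronous.

```cpp
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for the legacy per-object network manager.
struct LegacyNetworkManager
{
  std::vector<std::string> sent;
  void sendRequest(const std::string& url) { sent.push_back(url); }
};

// Hypothetical stand-in for the internal ClientRequestSender.
struct ClientRequestSenderStub
{
  std::vector<std::string> sent;
  void sendRequestAsync(const std::string& url) { sent.push_back(url); }
};

class PortalGroupImpl
{
public:
  explicit PortalGroupImpl(LegacyNetworkManager* networkManager) : m_networkManager(networkManager) {}
  virtual ~PortalGroupImpl() = default;

  // Legacy behavior: the API issues the request itself.
  virtual void fetchGroupUsers() { m_networkManager->sendRequest(usersUrl()); }

protected:
  std::string usersUrl() const { return "https://example.com/portal/groups/123/users"; }

private:
  LegacyNetworkManager* m_networkManager;
};

class CorePortalGroupImpl : public PortalGroupImpl
{
public:
  // The legacy network manager is never used on this path, so none is passed.
  explicit CorePortalGroupImpl(ClientRequestSenderStub* sender) : PortalGroupImpl(nullptr), m_sender(sender) {}

  // New behavior: ask Core to send the request so it flows through the Global Request Handler.
  void fetchGroupUsers() override { m_sender->sendRequestAsync(usersUrl()); }

private:
  ClientRequestSenderStub* m_sender;
};

// Callers hold a PortalGroupImpl and never branch on the authentication system.
std::unique_ptr<PortalGroupImpl> makePortalGroupImpl(bool useNewAuthentication,
                                                     LegacyNetworkManager* networkManager,
                                                     ClientRequestSenderStub* sender)
{
  if (useNewAuthentication)
    return std::unique_ptr<PortalGroupImpl>(new CorePortalGroupImpl(sender));
  return std::unique_ptr<PortalGroupImpl>(new PortalGroupImpl(networkManager));
}
```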

Challenge #4 – Testing coverage

Top-notch quality was a non-negotiable goal for us from the beginning. Initially, things went well, as we could follow the test designs written by the teams that had come before us. Our testing woes began once we fully understood the impact of the Global Request Handler and ClientRequestSender mentioned earlier. Suddenly, we couldn’t just add test cases for the new authentication classes we were introducing. Every class that issued network requests, whether it used authentication or not, now had two completely different code paths. That meant we needed to double our test coverage.

We faced a choice:

- Option A: Get clever – Add hooks and conditionals into our tests and test runner so tests could run in both modes.

- Option B: Duplicate – Copy and paste the tests, creating separate files for each mode.

Against the team’s initial instincts, we went with Option B for the following reasons:

- Each test’s name, code, and results in the Jenkins reports would map to exactly one mode, with clear expectations. This would simplify the daily task of monitoring test results and triaging failures.

- Some tests needed slight code modifications, which would otherwise have required a mess of #ifdefs littered throughout each test case.

- Duplicating would make it trivial to remove legacy tests later.

Yes, duplication is often a code smell, but the tests were clearer, more predictable, and more maintainable. The decision was pragmatic, and knowing that we only needed to live with the duplication for a few months made it more palatable.

Challenge #5 – Platform-specific issues

In the Native Maps SDKs group, we share more than just a common Core and API design. We also have shared test designs that specify test steps and data sources for all teams to implement. However, Native Maps SDKs for Swift, Kotlin, and Flutter only run on iOS, Android, and macOS, while Qt Maps SDK also runs on Windows and Linux. That difference ended up being significant: our new Integrated Windows Authentication (IWA) tests failed right out of the gate for Qt, but only on Windows.

The problem? IWA can be configured in two modes:

- NTLM (NT LAN Manager) – An older challenge/response protocol.

- Kerberos – A more modern, ticket-based protocol that provides a Single Sign-On experience.

Both modes use the same credentials you use to log into Windows, but they behave differently. If our tests ran against a Portal configured with IWA Kerberos, no challenges would reach our API, yet our test assertions expected them.

This mismatch didn’t just break our tests’ expectations; it broke our implementation. Up until then, the entire design was based on a challenge/response workflow: we would issue a request to the server, the response would indicate that authentication was required, and we’d forward the challenge to the developer to handle. However, with Kerberos on Windows, Qt’s network manager would see the WWW-Authenticate: Negotiate HTTP response header and automatically send the domain credentials back. As a result, our Qt Maps SDK never learns that a challenge was issued or that any authentication took place. Working through this workflow difference took a lot of coordination with the .NET team and our authentication experts to determine the right approach, but eventually we found a solution. Once our implementation was working, we reworked the test designs to clearly indicate the differing expectations on Windows.
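To make the workflow difference concrete, here is a small, hypothetical model of the decision, not the SDK’s actual code. It reads the scheme from a single WWW-Authenticate header value and decides whether the transport answers the challenge itself or the challenge is forwarded to the app. The transportAnswersNegotiate flag is an assumption standing in for platform behavior such as Qt’s network manager on Windows replying to Negotiate with domain credentials. Note that the HTTP Negotiate scheme (SPNEGO, RFC 4559) can itself select Kerberos or fall back to NTLM, and servers may send several WWW-Authenticate headers; the sketch ignores both.

```cpp
#include <algorithm>
#include <cctype>
#include <string>

// Authentication schemes relevant to IWA, as named in the WWW-Authenticate header.
enum class AuthScheme { Negotiate, Ntlm, Other };

// Returns the scheme token (the text before the first space), compared case-insensitively.
AuthScheme parseScheme(const std::string& wwwAuthenticate)
{
  std::string token = wwwAuthenticate.substr(0, wwwAuthenticate.find(' '));
  std::transform(token.begin(), token.end(), token.begin(),
                 [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
  if (token == "negotiate")
    return AuthScheme::Negotiate;
  if (token == "ntlm")
    return AuthScheme::Ntlm;
  return AuthScheme::Other;
}

enum class ChallengeOutcome { HandledByTransport, ForwardedToApp };

// Hypothetical routing: if the platform stack answers Negotiate on its own,
// the API layer never sees a challenge, which is what broke our test expectations.
ChallengeOutcome routeChallenge(const std::string& wwwAuthenticate, bool transportAnswersNegotiate)
{
  if (parseScheme(wwwAuthenticate) == AuthScheme::Negotiate && transportAnswersNegotiate)
    return ChallengeOutcome::HandledByTransport;
  return ChallengeOutcome::ForwardedToApp;
}
```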

This unexpected roadblock was an example of how platform diversity can introduce subtle, but significant, technical challenges.

Lessons learned

1. Parallel implementations were the right call

Looking back, building the new system alongside the legacy system was absolutely the right decision for us. Here’s why:

Separation of concerns – The new system stayed pure, free from legacy baggage. The legacy system stayed rock solid and unchanged, ensuring no new regressions.

Main branch integration – We could check the new API directly into the main branch, which meant:

- We got working software building daily, which is an important tenet of Agile software development.

- We avoided long-running branches that required constant merges to stay in sync.

- Other team members could start using the new API early, helping us gauge usability and stability.

- We could write and run daily automated tests from the start.

- If the API wasn’t ready by release time, then removing it would be straightforward.

This approach gave us flexibility, visibility, and confidence, without locking us into long-running, isolated development.

2. Removing a foundational system is never trivial

Even with careful planning, removing the legacy system from our large codebase was still a labor-intensive process. We learned that when you rip out a system that’s been in your stack for over a decade, you will uncover oddities you never predicted, such as internal classes accessing the legacy API in unexpected ways.

Our takeaway:

- Do everything you can to make the removal simple.

- Accept that it will still be complex, messy, and full of surprises.

- Plan for extra time and patience, as it’s not just a “delete and replace” job.

3. Plan for the unknown unknowns

We did a risk assessment early on, but the number of unforeseen issues was still surprising, and they had a real impact on our deadlines.

For example, during 200.6, things were going well with the ArcGIS Authentication implementation, but we decided to play it safe and wait until 200.7 to go live. We believed that this would allow us to ensure the foundation that we laid for ArcGIS Authentication would work well for Network Authentication too. Unfortunately, in 200.7, we hit a wave of unexpected extra work (IWA on Windows issues, massive test duplication, and more).

In the end, we dedicated an entire release to cleaning up loose ends and didn’t go live with the new authentication system until 200.8.

Lesson learned: build in buffer time for the things you don’t know you’ll encounter.

4. Be pragmatic, but don’t compromise the end goal

Throughout the project, we faced technical challenges that could have derailed us.

For example:

- Name clashes – We stuck to our goal of keeping the API pure, even though it meant ugly build “fixes” and potential confusion from having two AuthenticationManager classes. Those pain points were temporary, but the benefits will last.

- Test duplication – Copy-pasting tests felt wrong, but it was simple, achievable, and temporary. Six months later, when we removed them, we were glad we’d taken this approach.

A philosophy we live by on the Qt team is: be pragmatic. After this project, I think a better articulation of our philosophy is: be pragmatic… but don’t compromise the end goal.

Conclusion

In the end, we delivered everything we wanted in 200.8, but some unexpected challenges hampered our ability to incrementally release features along the way like we initially hoped. The migration ended up being more than just a technical upgrade. It was a crash course in adaptability. We learned that:

- Even the best-laid plans will encounter unknown unknowns.

- Pragmatism is essential, but the long-term vision must remain intact.

- Parallel implementations can be a lifesaver when replacing a foundational system.

- Platform diversity can surface hidden complexities that only appear in real-world testing.

Most importantly, we were reminded that swapping out our authentication system isn’t just about writing code; it’s about managing complexity, making trade-offs, and keeping the team aligned with the project vision through uncertainty.

The new Authentication API in Qt Maps SDK is now cleaner, more maintainable, and better aligned with modern ArcGIS requirements. To learn more about the Authentication API in Qt Maps SDK, see the previous ArcGIS Blog post, which covers the user-facing benefits, key differences between the legacy and new systems, and suggested migration patterns. If you’re interested in working on challenging, multi-platform, large-scale software projects like this, take a look at our careers site and join the team!