Geocoding is the process of converting addresses into geographic coordinates and is a common task in many data processing pipelines. ArcGIS GeoAnalytics Engine includes geocoding tools to take advantage of the scalability of Apache Spark to process large volumes of addresses and locations quickly. In this post, we’ll introduce a few tips and tricks on what to look for in your Spark cluster to improve geocoding performance using GeoAnalytics Engine.

#1 Partitioning: The Key to Improving Geocoding Performance

In Spark, data partitioning plays a crucial role in geocoding performance. To understand why, it helps to have some background on how Spark processes a job.

Spark Fundamentals

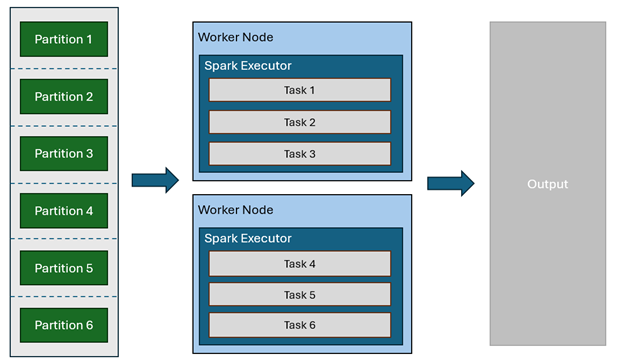

When Spark processes a job, it breaks the work into smaller units called tasks. A task is the execution of an operation on a single chunk of data—for example, geocoding a set of addresses. Each chunk of data is called a partition, which represents a subset of the input data—such as a portion of a CSV or Parquet file.

Spark distributes tasks across the worker nodes in a cluster. Each worker node runs processes called executors, which process tasks in parallel—typically one task per core. This means that partitioning determines the number of tasks and, in turn, the level of parallelism your cluster can achieve.
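To make the link between partitions and parallelism concrete, here is a deliberately simplified model. It assumes that each core runs exactly one task at a time and that all tasks take about the same time, which real workloads rarely do. The function names are hypothetical helpers, not part of Spark or GeoAnalytics Engine.

```python
import math


def task_waves(num_partitions: int, total_cores: int) -> int:
    """Rounds of tasks needed if each core runs one task at a time (simplified model)."""
    if num_partitions < 1 or total_cores < 1:
        raise ValueError("num_partitions and total_cores must be positive")
    # At most total_cores tasks run concurrently; the remaining tasks wait for a free core.
    return math.ceil(num_partitions / total_cores)


def idle_cores(num_partitions: int, total_cores: int) -> int:
    """Cores left without any task when there are fewer partitions than cores."""
    return max(total_cores - num_partitions, 0)


if __name__ == "__main__":
    cores = 40
    for partitions in (10, 40, 80, 160):
        print(
            f"{partitions:>3} partitions on {cores} cores: "
            f"{task_waves(partitions, cores)} wave(s), "
            f"{idle_cores(partitions, cores)} idle core(s)"
        )
```

In this model, a DataFrame with only 10 partitions leaves 30 of 40 cores idle no matter how many addresses it contains, because there are only 10 tasks to hand out.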

For more information on Spark architecture, see the Spark documentation about cluster architecture.

What this means for geocoding

Spark will try to optimally partition the input data, but we recommend checking and enforcing partitioning to ensure that there are 2-4 partitions per core in your cluster for the best geocoding performance.
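A small check like the following can flag when a DataFrame falls outside the recommended 2–4 partitions per core. It is a sketch: the function name and the returned labels are hypothetical, and you supply the partition count (for example, from df.rdd.getNumPartitions()) and the core count for your cluster.

```python
def partition_status(num_partitions: int, total_cores: int,
                     low: int = 2, high: int = 4) -> str:
    """Classify a partition count against the low..high partitions-per-core window."""
    if total_cores < 1:
        raise ValueError("total_cores must be positive")
    # Recommended window: 2-4 partitions per core by default
    min_partitions = low * total_cores
    max_partitions = high * total_cores
    if num_partitions < min_partitions:
        return "below"
    if num_partitions > max_partitions:
        return "above"
    return "within"


if __name__ == "__main__":
    print(partition_status(40, 40))   # below
    print(partition_status(120, 40))  # within
```

On a live cluster you might call it as partition_status(df.rdd.getNumPartitions(), spark.sparkContext.defaultParallelism), keeping in mind the autoscaling caveat about defaultParallelism discussed below.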

How to Check and Change Partitions

- Checking Partitions: You can check the number of partitions in a DataFrame using the following code:

```python
df.rdd.getNumPartitions()
```

- Repartitioning DataFrames: Repartitioning can help balance the workload more evenly across executors and workers. To optimize partitioning, you can repartition your DataFrame with the .repartition() method, using spark.sparkContext.defaultParallelism to estimate a suitable number of partitions.

```python
# Assumes an active SparkSession named `spark` and a DataFrame named `df`.

# Get the current number of partitions in the DataFrame
num_partitions = df.rdd.getNumPartitions()

# Approximate the number of cores available to the application
# (defaultParallelism typically reflects total executor cores at startup)
available_cores = spark.sparkContext.defaultParallelism

# Target at least 2 partitions per available core
min_target_partitions = available_cores * 2

# Repartition only if the DataFrame has fewer partitions than the target
if num_partitions < min_target_partitions:
    df = df.repartition(min_target_partitions)
```

Warning: Available cores in an autoscaling cluster

Spark has built-in mechanisms for adjusting the number of executors and the level of parallelism during a job. However, keep in mind that autoscaling does not always result in the most efficient partitioning. Default parallelism is often set to the number of cores available across all executors at the time the Spark session is initialized.

For example, if your autoscaling cluster starts with 3 nodes and later scales up to 10 nodes, spark.sparkContext.defaultParallelism will only return the number of cores available on the 3 nodes that were present when the Spark application started.

To avoid this, we recommend repartitioning your input data to 2–4x the number of cores in the cluster when fully scaled. While this might introduce slight overhead when not scaled up, it ensures efficient resource utilization as the cluster reaches maximum capacity.

For instance, in the example above, with a maximum capacity of 10 worker nodes with 4 cores each (40 cores in total), you should repartition the input DataFrame to at least 80 partitions (2 × 10 worker nodes × 4 cores per node).
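The sizing rule for autoscaling clusters can be written as a short helper. This is a sketch; the function name is hypothetical, and the maximum worker count and cores per worker are values you would take from your cluster's autoscaling configuration, because Spark's defaultParallelism only reflects the cores present at startup.

```python
from typing import Tuple


def autoscale_partition_range(max_workers: int, cores_per_worker: int,
                              low: int = 2, high: int = 4) -> Tuple[int, int]:
    """Recommended (min, max) partition counts for the fully scaled cluster."""
    # Size against the maximum cores, not the cores present when Spark started.
    max_cores = max_workers * cores_per_worker
    return low * max_cores, high * max_cores


if __name__ == "__main__":
    # 10 worker nodes x 4 cores each
    min_parts, max_parts = autoscale_partition_range(10, 4)
    print(min_parts, max_parts)  # 80 160
```

You would then pass the lower bound (or any value in the range) to df.repartition().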

#2 Locator Proximity: Keeping Data Local

When working with geocoding in GeoAnalytics Engine, keeping locator files co-located with the Spark cluster is another performance factor to keep in mind. Ideally, geocoding tools should operate with locators stored locally, either on disk or in memory, to avoid the performance penalty of network calls.

- Copy locator files from cloud storage to local disk using init scripts: You can copy the locator files to each worker node in your Spark cluster with an init script. This must be set up when the Spark cluster is created.

- addFile: You can also leverage the sc.addFile function to add the locator file to each worker after Spark is initialized.
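The addFile approach might look like the following sketch. SparkContext.addFile and SparkFiles.get are standard PySpark APIs; the two wrapper functions and the locator path are hypothetical placeholders. How the resulting node-local path is then passed to the Geocode tool is covered in the GeoAnalytics documentation and is not shown here. This sketch handles a single file.

```python
import os


def distribute_locator(sc, locator_path: str) -> str:
    """Ship one locator file to every node with SparkContext.addFile.

    Returns the bare file name, which is what SparkFiles.get() expects.
    """
    # addFile registers the file to be downloaded on every node for this application.
    sc.addFile(locator_path)
    return os.path.basename(locator_path)


def node_local_path(file_name: str) -> str:
    """Resolve the node-local copy of a file previously added with addFile."""
    # Imported lazily so this module can be loaded where PySpark is not installed.
    from pyspark import SparkFiles
    return SparkFiles.get(file_name)


# Example usage with a hypothetical path (requires an active Spark session):
# name = distribute_locator(spark.sparkContext, "/path/to/my_locator_file")
# local_path = node_local_path(name)
```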

For more detailed examples, see the GeoAnalytics documentation on Locator and Network Dataset Setup.

#3 Worker Memory Size

Another key factor in improving geocoding performance is sizing worker memory relative to the size of the locator file. Since reading from the locator is often the bottleneck in a geocode operation, GeoAnalytics Engine tries to load the locator into memory using a process called memory mapping. This speeds up geocoding because reading from memory is much faster than reading from disk.

To benefit from this optimization, each worker node needs enough available memory to hold the locator.

Suggested Memory Formula

For each worker, the recommended minimum memory size for geocoding operations is:

Recommended Minimum Memory = Locator Size + (Locator Size * Number of Cores on each Worker / 20)

For example, on Azure Databricks with the ArcGIS StreetMap Premium North America locator (about 20 GB), you should choose worker nodes with 4 cores and at least 24 GB of memory.
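The memory formula can be expressed as a small helper. The function name is hypothetical and sizes are in GB. It reproduces the 24 GB figure for a roughly 20 GB locator on 4-core workers and shows how the requirement grows with the number of cores per worker.

```python
def recommended_min_memory_gb(locator_size_gb: float, cores_per_worker: int) -> float:
    """Recommended minimum worker memory in GB for geocoding."""
    # Locator Size + (Locator Size * Number of Cores on each Worker / 20)
    return locator_size_gb + locator_size_gb * cores_per_worker / 20


if __name__ == "__main__":
    # ~20 GB locator, as in the StreetMap Premium North America example
    for cores in (4, 8, 16):
        print(f"{cores:>2} cores/worker -> at least "
              f"{recommended_min_memory_gb(20, cores):.0f} GB")
```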

Other performance considerations

In addition to tuning your Spark environment, it is important to also consider the impact of the cleanliness of your address data, the extent of your analysis, as well as the level of resolution needed for the geocodes.

Address cleanliness. You will likely see a slowdown in performance with address strings that include misspellings, missing information (e.g., no street directionality like “northeast”, or no street name suffix like “road” or “street” or “blvd”), or use of non-standard abbreviations for street name, state, or country, etc.

Extent of analysis. When selecting a locator, make sure its spatial extent covers that of your input addresses. For example, if your dataset primarily contains addresses in Boston, it’s best to use a locator focused on that region, such as a city- or state-level locator.

In some cases, a locator with a broader spatial extent will be needed, such as a U.S.-wide locator for a dataset mostly centered in Boston that contains a few outlier addresses elsewhere along the East Coast. However, keep in mind that using a locator with a significantly larger spatial extent than necessary may increase processing time, because it takes longer to search the larger locator for matches.

Level of resolution. Geocoding speed will generally be quicker for more generalized locations, such as returning a geocoded point location at the city or postal code level, versus at the address / parcel level.

Input data formats. Consider storing large volumes of address strings in columnar storage formats such as Parquet or ORC. These formats support efficient compression and predicate pushdown, and generally offer significantly faster reads than row-based formats like CSV or JSON. In addition, large uncompressed text files can consume excessive network and disk resources, increasing I/O overhead and slowing processing in Spark.
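A one-time conversion from CSV to Parquet can be as short as the sketch below. It uses standard PySpark reader and writer calls; the function name and paths are hypothetical, and it assumes a CSV file with a header row. Because schema inference is off by default, every column, including the address fields, is read as a string.

```python
def convert_csv_to_parquet(spark, csv_path: str, parquet_path: str) -> None:
    """One-time conversion of a headered CSV of addresses to Parquet."""
    # Without inferSchema, Spark reads every CSV column as a string.
    addresses = spark.read.csv(csv_path, header=True)
    # Parquet output is columnar and compressed (Snappy by default in Spark).
    addresses.write.mode("overwrite").parquet(parquet_path)


# Example usage with hypothetical paths (requires an active SparkSession):
# convert_csv_to_parquet(spark, "/data/addresses.csv", "/data/addresses.parquet")
# df = spark.read.parquet("/data/addresses.parquet")
```

Subsequent geocoding runs would then read the Parquet copy instead of the original CSV.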

Conclusion

Improving geocoding performance in ArcGIS GeoAnalytics Engine requires a thoughtful approach to Spark configuration, data partitioning, memory management, and locator placement. Testing and iterating based on your data and infrastructure will yield the best results, but by applying the best practices outlined in this post (tuning partition counts, co-locating locator files, and ensuring sufficient memory on each worker), you can significantly improve the speed and efficiency of your geocoding workflows. For instance, we tested a dataset of 125 million US building addresses based on the Microsoft building footprint dataset with various partitioning schemes. Without repartitioning, the Geocode tool took about 4.38 hours to finish on a cluster with 400 cores; with repartitioning, it took only 2.87 hours, roughly 34% less time. This adjustment within the GeoAnalytics Engine environment makes a significant difference in performance.

We hope these details on geocoding have been helpful for your analytic workflows! We’re excited to hear about what you’re doing with Geocoding tools in GeoAnalytics Engine. Please feel free to provide feedback or ask questions in the comment section below.
