Deep learning has recently become an important part of spatial analysis in imagery and GIS. Deep learning neural networks can handle many computer vision tasks, and Esri has developed tools for tasks such as image classification, object detection, semantic segmentation, and instance segmentation.

One of the latest enhancements to these capabilities is image-to-image translation. Examples include Super Resolution, which enhances low-resolution imagery to high resolution, and CycleGAN, which can translate radar data to imagery.

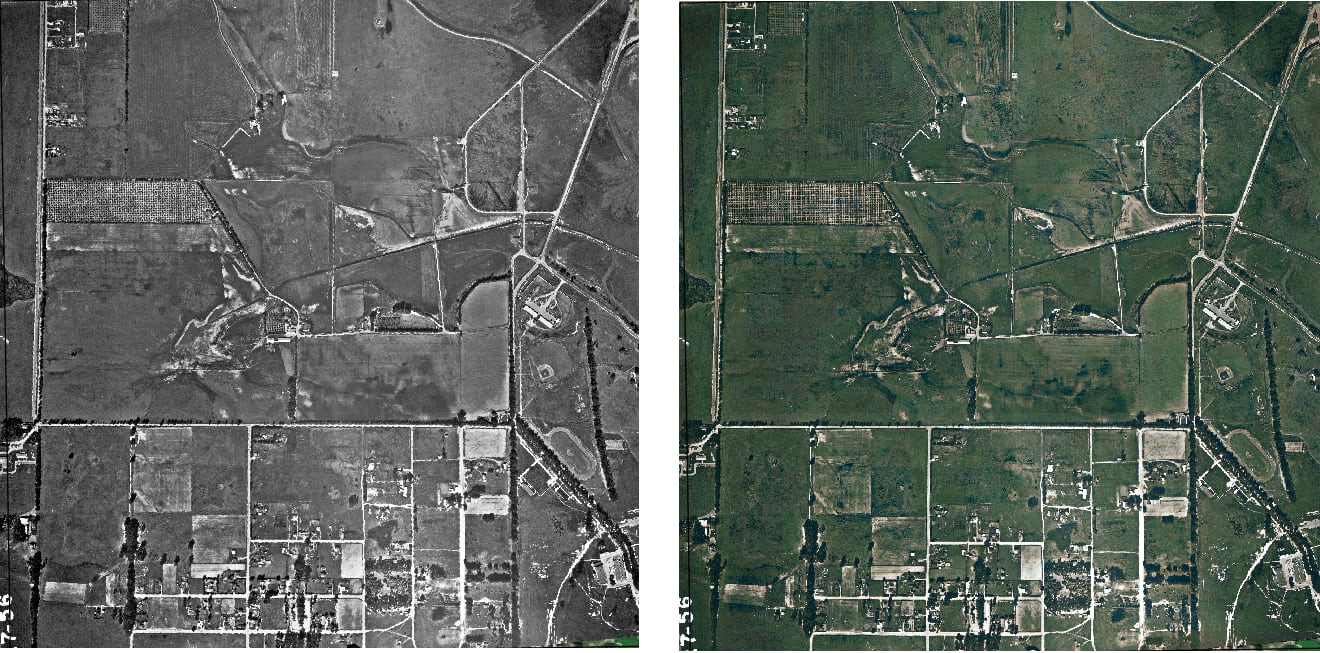

Here we will show how one can use Image Translation to colorize Black & White images.

Community planners, urban designers, and architects often need to show historic and current images together to demonstrate growth over time. When the historic images are black and white and the current images are in color, the change is often not obvious to the general public. Let's see if deep learning can enhance the historic imagery. For this we will use the Pix2Pix model.

Pix2Pix is a Generative Adversarial Network (GAN) model designed for Image-to-Image translation. You can learn more about the Pix2Pix architecture.

![Pix2Pix model architecture [https://arxiv.org/pdf/1905.02200.pdf]](https://www.esri.com/arcgis-blog/wp-content/uploads/2021/08/Pix2Pix-Model-Architecture.jpg)

Data Preparation

In deep learning projects, one of the most time-consuming tasks is preparing data labels. But for Pix2Pix (and all other image-to-image translation models), all you need is paired images. In our case, we used pairs of B&W and color images. Because we did not have a B&W image for this project, we simply used one of the bands of our 3-band color image as the B&W image.

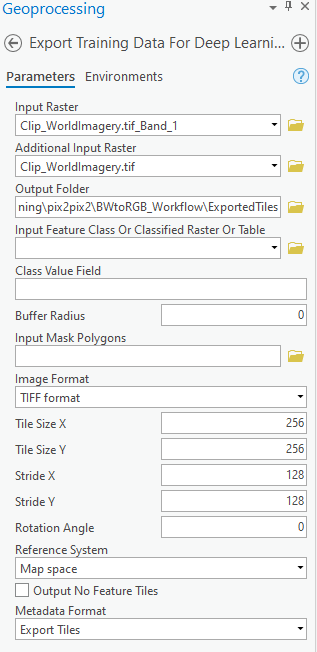
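The post does not say which tool was used to pull out the single band, so the following is only a minimal NumPy sketch of the idea: treat one band of a 3-band array as the B&W image. The function name `band_as_grayscale` and the tiny synthetic array are illustrative assumptions, not part of the original workflow.

```python
import numpy as np


def band_as_grayscale(rgb, band_index=0):
    """Return one band of a (height, width, bands) array as a 2-D array."""
    rgb = np.asarray(rgb)
    if rgb.ndim != 3 or rgb.shape[2] < 3:
        raise ValueError("expected an array shaped (height, width, bands)")
    # Copy so later edits to the B&W array do not touch the color array.
    return rgb[:, :, band_index].copy()


# Tiny synthetic 2 x 2 image standing in for the 8-bit color raster (assumption).
color = np.array(
    [[[200, 120, 40], [10, 20, 30]],
     [[0, 255, 0], [90, 90, 90]]],
    dtype=np.uint8,
)

# Band index 0 is the red band if the array is stored in RGB order.
bw = band_as_grayscale(color, band_index=0)
```

The result keeps the 8-bit data type of the source, which matches the 8-bit, 1-band raster described in the next step.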

Exporting Training Sample

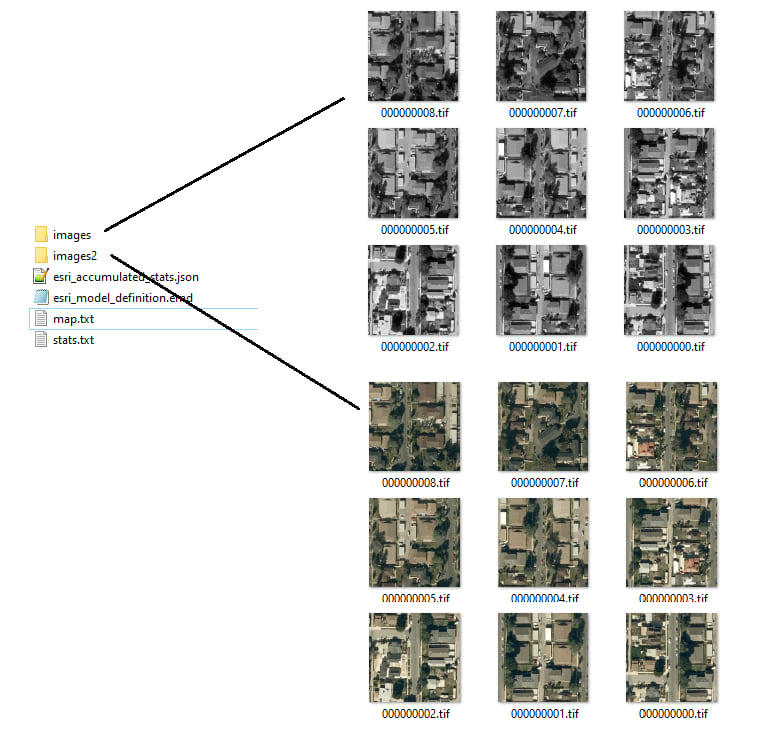

Next, the Export Training Data for Deep Learning geoprocessing tool is used to create training samples. In the tool, we used one band of the color image (an 8-bit, 1-band B&W raster) as the Input Raster, the color RGB image as the Additional Input Raster, and Export Tiles as the Metadata Format.

The output of this tool is two image folders and their corresponding metadata. The image folders contain the B&W and color image chips.
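For readers who prefer scripting, the same export can be sketched with `arcpy`. This is a hedged example, not the exact call used for this post: the wrapper name `export_pix2pix_chips`, the TIFF chip format, and the tile size and stride values are assumptions, and it needs ArcGIS Pro with the Image Analyst extension to run.

```python
def export_pix2pix_chips(bw_raster, color_raster, out_folder,
                         tile_size=256, stride=128):
    """Export paired B&W / color chips using the Export Tiles metadata format.

    tile_size and stride are illustrative values, not taken from the post.
    """
    import arcpy  # imported here so the module loads without ArcGIS Pro

    arcpy.CheckOutExtension("ImageAnalyst")
    arcpy.ia.ExportTrainingDataForDeepLearning(
        in_raster=bw_raster,              # 1-band B&W raster (Input Raster)
        out_folder=out_folder,
        image_chip_format="TIFF",         # assumed chip format
        tile_size_x=tile_size,
        tile_size_y=tile_size,
        stride_x=stride,
        stride_y=stride,
        metadata_format="Export_Tiles",   # Export Tiles
        in_raster2=color_raster,          # color image (Additional Input Raster)
    )
    return out_folder
```

A call would look like `export_pix2pix_chips("bw.tif", "color.tif", "chips")`, where the three paths are placeholders for your own data.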

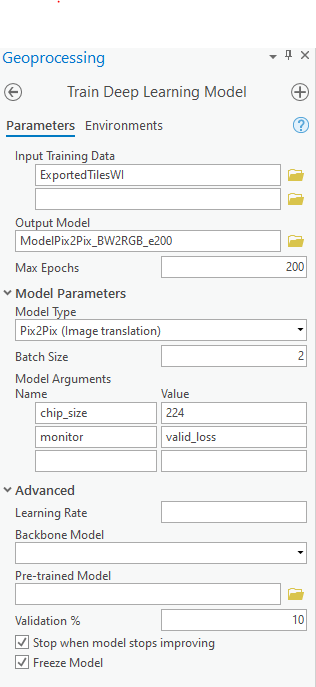

Train the Deep Learning model

The Pix2Pix model can be trained with the Train Deep Learning Model tool in ArcGIS Pro or with the arcgis.learn module in ArcGIS API for Python. The input is the training data that was exported in the previous step. Though this step is fairly simple, it is time-consuming. A supported GPU greatly reduces the processing time. In our case, we used an NVIDIA Quadro RTX 4000 GPU, and training the model for 200 epochs took about 9 hours.

The output is a trained model in the form of a deep learning package (dlpk) containing an Esri model definition (EMD) file, which can then be used to create a colorized version of an input B&W image.
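If you take the arcgis.learn route, training might look roughly like the sketch below. The wrapper name `train_pix2pix`, the batch size, and the model name are assumptions for illustration; only the 200 epochs come from this post. It requires the ArcGIS API for Python with the deep learning frameworks installed.

```python
def train_pix2pix(chips_folder, model_name, epochs=200, batch_size=8):
    """Train a Pix2Pix model on exported chips and save it.

    batch_size is an illustrative value; pick one that fits your GPU memory.
    """
    # Imported here so the module loads without arcgis installed.
    from arcgis.learn import Pix2Pix, prepare_data

    # Read the paired chips written by the export step.
    data = prepare_data(chips_folder, dataset_type="Pix2Pix",
                        batch_size=batch_size)
    model = Pix2Pix(data)
    model.fit(epochs)        # the post reports about 9 hours for 200 epochs
    model.save(model_name)   # writes the trained model to disk
    return model
```

For a first pass it can be worth running only a handful of epochs, for example `train_pix2pix("chips", "bw_to_color", epochs=5)`, to confirm the data loads correctly before committing to a long run.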

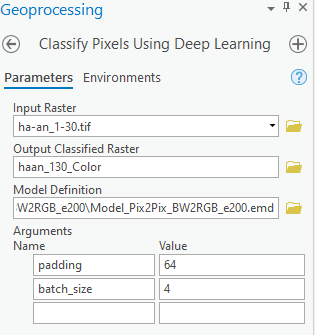

Use the model

We used the Classify Pixels Using Deep Learning tool to create a color image from our B&W image. We specified the input raster and the location of the trained model (EMD or dlpk file). Here is a screen capture of the tool with the parameters used, but really all we need to do is provide the B&W image and the model definition, and specify an output location and file name.
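The same step can be scripted with `arcpy`. The sketch below is an assumption-laden illustration rather than the exact call used here: the wrapper name `colorize` is hypothetical, and the optional model arguments string is left unset because suitable values depend on the model. It needs ArcGIS Pro with the Image Analyst extension.

```python
def colorize(bw_raster, model_path, out_raster, arguments=None):
    """Run a trained model (EMD or dlpk) on a B&W raster and save the result.

    arguments is the optional model arguments string; it is left as None here
    because appropriate values are model specific.
    """
    import arcpy  # imported here so the module loads without ArcGIS Pro

    arcpy.CheckOutExtension("ImageAnalyst")
    result = arcpy.ia.ClassifyPixelsUsingDeepLearning(
        bw_raster, model_path, arguments
    )
    result.save(out_raster)  # output location and file name
    return out_raster
```

A call would look like `colorize("historic_bw.tif", "bw_to_color.dlpk", "historic_color.tif")`, with all three paths being placeholders.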

Result

Below are two images showing the input and the output, respectively.

Here we are comparing images from two periods (1956 vs. 2021). Now that we have a colorized image for 1956 as well, the audience will find it easier to understand development in the area.

Summary

As you can see, with Esri’s deep learning capabilities and the numerous, ever-growing model types available in ArcGIS Pro, you can now perform image enhancement and classification for analysis to solve real-world problems.

See these resources for information on Deep learning using ArcGIS:

- Deep learning in ArcGIS Pro

- Geospatial deep learning with arcgis.learn

- Deep learning models in arcgis.learn

- How Pix2Pix works?

Special thanks to UCSB Library Geospatial Collections for letting us use the B&W Aerial Image used in this blog.

Credit: ha-an_1-30.tif, digital photograph, Flight HA-AN, frame 1-30, UCSB Library Geospatial Collections, accessed 8 August 2021, <https://mil.library.ucsb.edu/ap_images/ha-an/ha-an_1-30.tif>
