April 18, 2019 |

March 5, 2020

At least 2.2 billion people have a vision impairment or blindness, according to a recent report by the World Health Organization. That number is growing due to factors such as staring at digital screens and spending more time indoors, both of which can contribute to nearsightedness. Cases of diabetes are also rising, and impaired vision is one of its potential complications.

Many people affected by visual impairment can’t get the care they need, face challenges navigating safely, and want greater independence. Now, technology is helping reveal the world around them in ways beyond visual perception.

Descriptive Text for Mental Maps

How we move about is essentially a spatial problem. We perceive the distance and direction in which we want to go and take steps to get there. We see a step and lift our foot high enough to climb onto it. We see the edges of a sidewalk and keep to the middle of it as we walk.

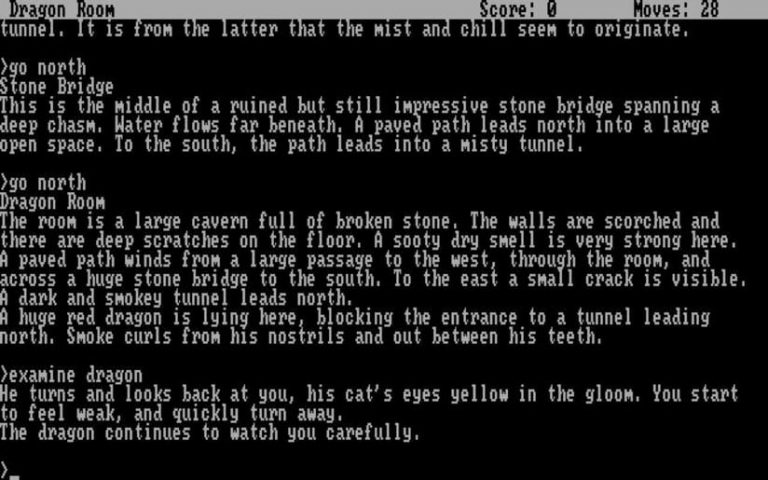

But how do you reveal spatial relationships to someone who can’t see them? One clue comes from vintage text adventure computer games, which used words to describe a player’s virtual surroundings. These games described what lay to the north, south, east, and west of the player’s position. Players used that information to build a mental map and navigate the mazes of an invisible world.

If this sort of descriptive information were read aloud, or conveyed through a tactile writing system such as braille, to someone with a visual impairment, it would paint a similar picture of the space around that person.
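The text-adventure idea can be sketched in a few lines of Python. This is a minimal illustration, not a navigation product: it assumes the user's position and a hypothetical list of named places are given as latitude/longitude pairs, computes the initial great-circle bearing to each place, and sorts places into four 90-degree sectors (north, east, south, west) to produce sentences that could be read aloud or sent to a braille display. All function names here are hypothetical.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def cardinal(bearing):
    """Bin a bearing into one of four 90-degree sectors centered on N, E, S, W."""
    return ["north", "east", "south", "west"][int(((bearing + 45.0) % 360.0) // 90.0)]


def describe_surroundings(lat, lon, places):
    """Group places by direction and return text-adventure-style sentences.

    `places` is a hypothetical list of (name, lat, lon) tuples.
    """
    groups = {"north": [], "east": [], "south": [], "west": []}
    for name, plat, plon in places:
        groups[cardinal(initial_bearing(lat, lon, plat, plon))].append(name)
    # Only mention directions that actually contain something.
    return [f"To the {d}: {', '.join(names)}." for d, names in groups.items() if names]


if __name__ == "__main__":
    nearby = [("Bus stop", 0.001, 0.0), ("Bakery", 0.0, 0.001)]
    for sentence in describe_surroundings(0.0, 0.0, nearby):
        print(sentence)
```

A real system would also need distance limits and directions relative to the way the user is facing, but the principle of turning coordinates into words is the same.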

But the stakes are much higher in real life. Someone navigating busy city streets, for example, has little margin for error. All information must be complete, accurate, detailed, accessible, and up-to-date.

A Repository of Spatial Knowledge

Blind or partially sighted people might want many types of information about the world around them. They might just need to know how to get from point A to point B, or they may need a bus route timetable. They might want historical background on the building in front of them, or its opening hours. All of this information has something in common: it references specific locations, the connections between them, or details about those locations.

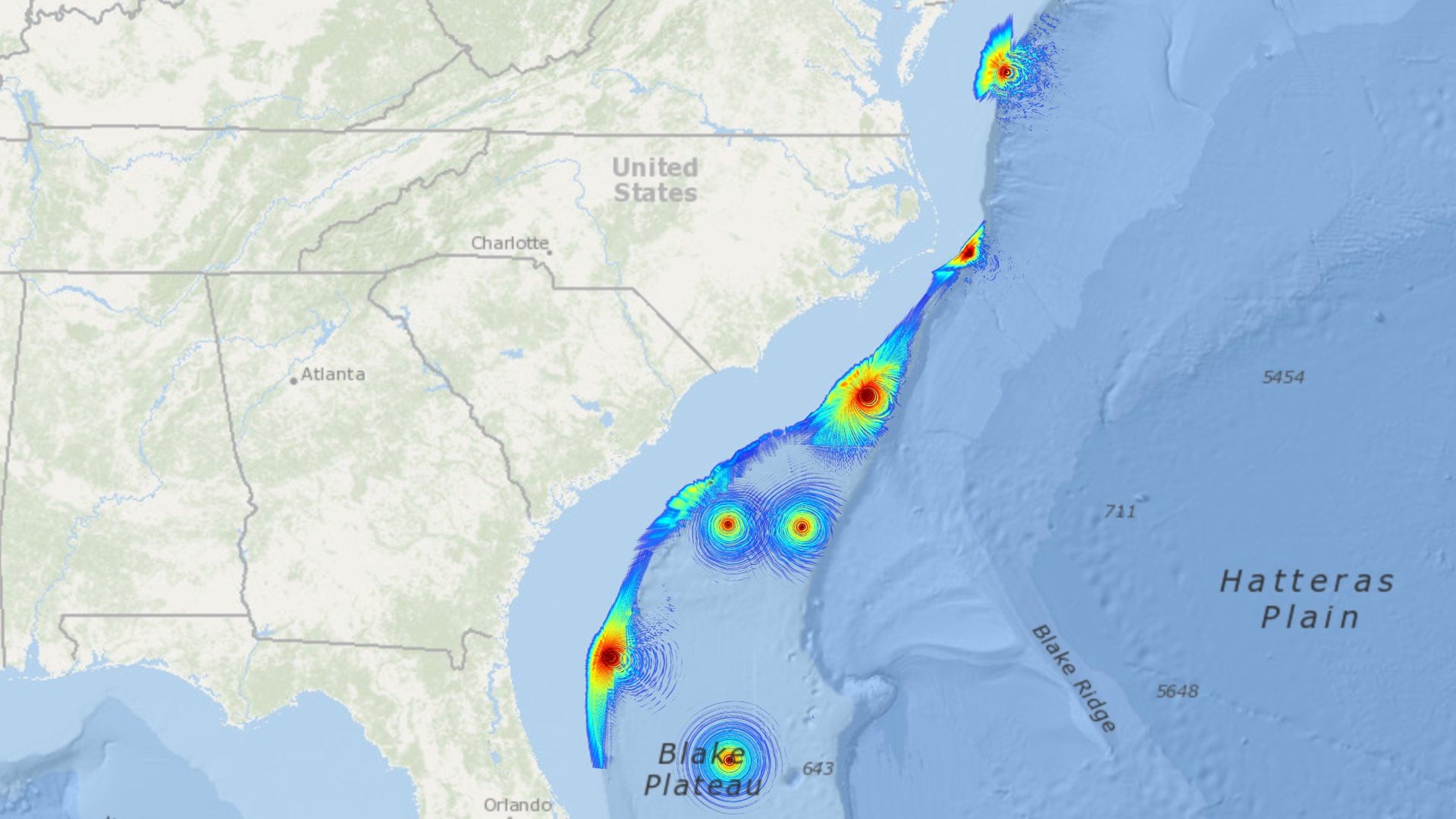

Huge amounts of location information can be stored in a geographic information system (GIS), a specialized repository that can hold all the data people with visual impairments might need. Within a city’s GIS, for example, you might find labeled points of interest such as bus stops, along with the times when buses stop at each station. You could also find out which points of interest are within walking distance of a station. A GIS can hold different types of data as well, such as coordinates, numeric values (like the distance between you and your destination), or descriptive text (like in the computer game example). And as more and more objects connect to the Internet of Things, a GIS can hold spatial information about them too, in real time.
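As a rough illustration of the “within walking distance” query, the sketch below filters points of interest by great-circle (haversine) distance from a station. It assumes coordinates in decimal degrees, treats the Earth as a sphere with a mean radius of 6,371 km, and uses a hypothetical 400-meter walking threshold; the function names are illustrative, and a production GIS would typically answer this kind of query with a spatial index rather than a loop.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def within_walking_distance(station, pois, max_m=400.0):
    """Return (name, rounded distance in meters) for POIs within max_m, nearest first.

    `station` is a (lat, lon) tuple; `pois` is a hypothetical list of (name, lat, lon).
    """
    lat0, lon0 = station
    hits = []
    for name, lat, lon in pois:
        d = haversine_m(lat0, lon0, lat, lon)
        if d <= max_m:
            hits.append((name, round(d)))
    return sorted(hits, key=lambda hit: hit[1])


if __name__ == "__main__":
    pois = [("Pharmacy", 0.001, 0.0), ("Museum", 0.005, 0.0), ("Library", 0.002, 0.0)]
    print(within_walking_distance((0.0, 0.0), pois))
```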

Thanks to improvements in GPS, GIS-based applications can now locate users accurately enough to support outdoor navigation in real time. GIS can also interface with artificial intelligence (AI) and machine learning applications to perform tasks like object detection.

So, the possibility emerges for a visual receptor like a camera lens to “see” for a person. In sighted people, the brain combines the slightly different views from each eye to perceive depth. Two camera lenses placed slightly apart on the same assistive device could capture simultaneous images, mimicking this binocular vision. Measuring how far an object shifts between the two images could then reveal its actual distance. Machine learning algorithms could identify the object in real time and relay that information to the user, and the GIS could place it in the context of location.
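As a minimal sketch, assuming an idealized camera pair that is already calibrated and rectified (the standard pinhole stereo model), the distance Z to an object follows from its disparity d (how many pixels it shifts horizontally between the left and right images), the focal length f in pixels, and the baseline B between the lenses in meters: Z = f · B / d. The function names and example numbers below are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance in meters to a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity (or the match is wrong).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def object_distance(x_left_px: float, x_right_px: float, focal_px: float, baseline_m: float) -> float:
    """Distance to an object given its horizontal pixel position in the left and right images."""
    disparity = x_left_px - x_right_px  # the object appears further left in the right image
    return depth_from_disparity(focal_px, baseline_m, disparity)


if __name__ == "__main__":
    # Hypothetical device: 700 px focal length, lenses 6 cm apart,
    # object detected at x=420 px (left image) and x=400 px (right image).
    print(f"{object_distance(420, 400, focal_px=700, baseline_m=0.06):.2f} m")
```

Because Z is inversely proportional to d, nearby objects shift more between the two images and can be ranged more precisely than distant ones.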

In this way, technology turns location into a mobile window to the world and its information.

Interfaces: Separating Signal from Noise

Putting all this technology to work for a visually impaired person requires answering two important questions: What information is most useful? And what is the best way to communicate it?

When navigating without sight, not all available information is necessary, and it is certainly not needed all at once. Receiving every possible input would be overwhelming. Information delivery has to be functional.

GIS is helpful in this regard because it lets users select the information they want without presenting all of it at the same time. Developers can build apps that interface with a GIS to meet specific needs. Users could zoom in on an exact location to find out what’s nearby, then zoom out to learn whatever else they need to know.
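One way an app could avoid overwhelming the user is to tie each “zoom level” to a search radius and a cap on how many features are announced, optionally filtered by category. The sketch below is hypothetical throughout: the zoom levels, radii, limits, and `Feature` fields are illustrative assumptions, and it assumes coordinates have already been projected into a local grid measured in meters, so plain Euclidean distance is adequate.

```python
import math
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    category: str
    x_m: float  # easting in a local metric grid (assumed already projected)
    y_m: float  # northing in the same grid


# Hypothetical zoom levels: (search radius in meters, max features to announce)
ZOOM_LEVELS = {"street": (50.0, 3), "block": (250.0, 5), "neighborhood": (1000.0, 8)}


def whats_around(x_m, y_m, features, zoom="street", categories=None):
    """Names of the nearest features within the zoom level's radius, capped at its limit."""
    radius, limit = ZOOM_LEVELS[zoom]
    nearby = []
    for f in features:
        if categories is not None and f.category not in categories:
            continue  # the user asked only for certain kinds of features
        d = math.hypot(f.x_m - x_m, f.y_m - y_m)
        if d <= radius:
            nearby.append((d, f.name))
    nearby.sort()  # nearest first
    return [name for _, name in nearby[:limit]]


if __name__ == "__main__":
    city = [
        Feature("Bench", "furniture", 10, 0),
        Feature("Bus stop", "transit", 30, 0),
        Feature("Crossing", "crossing", 0, 40),
        Feature("Cafe", "food", 200, 0),
        Feature("Station", "transit", 800, 0),
    ]
    print(whats_around(0, 0, city, zoom="street"))
    print(whats_around(0, 0, city, zoom="neighborhood", categories={"transit"}))
```

Keeping the limit small at close range and widening it only when the user zooms out is one way to make delivery “functional” in the sense described above.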

As technology develops, so do the ways we can communicate information. Voice interfaces such as speech-to-text, for instance, are already built into many phones and mobile devices. Some organizations have created navigation apps for people with reduced vision, and accessible website interfaces allow people who use screen readers to navigate and explore online.

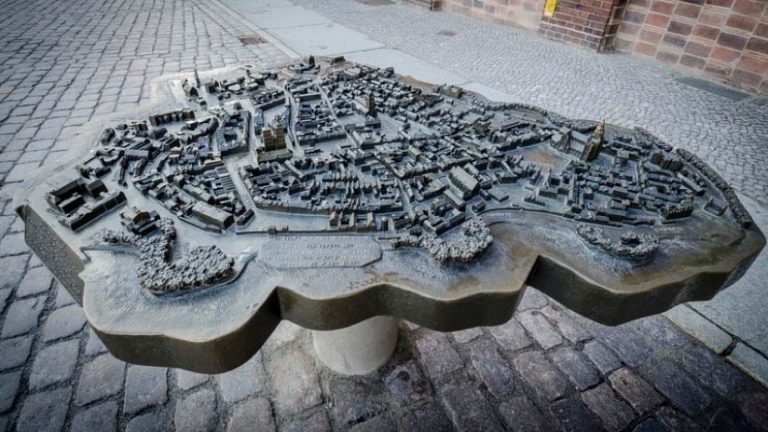

For people who are blind, sound is often an important input. Many are already attuned to the soundscapes around them and use that feedback to navigate and experience their surroundings. As a result, navigation instructions or descriptions delivered audibly could interfere with this sensory information gathering. In response to this concern, developers are exploring other ways of communicating through haptic technology, which applies forces, vibration, or motion. For example, one vibration could signal a left turn, while two vibrations signal a right turn. Haptic maps provide tactile representations of areas or objects. People can touch and review these maps ahead of time to help memorize a route, or carry them along for reference.
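The one-vibration/two-vibration scheme can be sketched as follows. It assumes the device knows the user’s current heading and the bearing to the next waypoint (both in degrees clockwise from north); the 20-degree “straight ahead” tolerance, the pulse timings, and the function names are hypothetical. The output is a list of alternating on/off durations in milliseconds, the same shape of pattern that the Web Vibration API’s `navigator.vibrate()` accepts.

```python
def relative_turn(heading_deg, bearing_deg):
    """Signed angle from current heading to target bearing, in (-180, 180]; negative means left."""
    diff = (bearing_deg - heading_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def vibration_pattern(heading_deg, bearing_deg, straight_tolerance_deg=20.0, pulse_ms=200, gap_ms=150):
    """Alternating [on, off, on, ...] durations in ms: 1 pulse = left, 2 pulses = right, none = straight."""
    turn = relative_turn(heading_deg, bearing_deg)
    if abs(turn) <= straight_tolerance_deg:
        pulses = 0  # close enough to straight ahead: stay silent
    elif turn < 0:
        pulses = 1  # one vibration: turn left
    else:
        pulses = 2  # two vibrations: turn right
    pattern = []
    for i in range(pulses):
        pattern.append(pulse_ms)  # motor on
        if i < pulses - 1:
            pattern.append(gap_ms)  # motor off between pulses
    return pattern


if __name__ == "__main__":
    print(vibration_pattern(heading_deg=0, bearing_deg=270))  # waypoint to the left
    print(vibration_pattern(heading_deg=0, bearing_deg=90))   # waypoint to the right
```

Staying silent within the tolerance keeps the device from buzzing at every small wobble in the user’s heading.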

Seeing in the Future

Developers around the world are already tapping into GIS to reveal visual information in alternate ways.

Educators in a town in Austria spent about three years collecting data to help people with visual impairments navigate its streets. The team gathered details about sidewalks, fences, mailboxes, and lampposts. They added that information to a GIS and developed an app that delivered it to those who needed it.

Advances and trials in indoor mapping are also exciting for people with visual impairments. Indoor mapping should one day help them not only arrive at a destination but also navigate confidently inside it.

Humanity’s power to assist blind and partially sighted people will only increase as we add more and more helpful datasets to repositories like GIS. Usability will improve as we continue to refine the interfaces we use to convey that knowledge. We can look forward to a future where people with visual impairments and blindness can experience greater safety, independence, and quality of life.
