One of the tasks that artificial intelligence excels at is Object Detection. This computer vision technique allows us to identify and locate objects within images and videos. It is an invaluable tool for mapping our world, creating digital twins, and gaining a quantitative understanding of our environment. These, in turn, fuel data-driven decisions for smart cities, urban planning, resource management, environment protection, and disaster response.

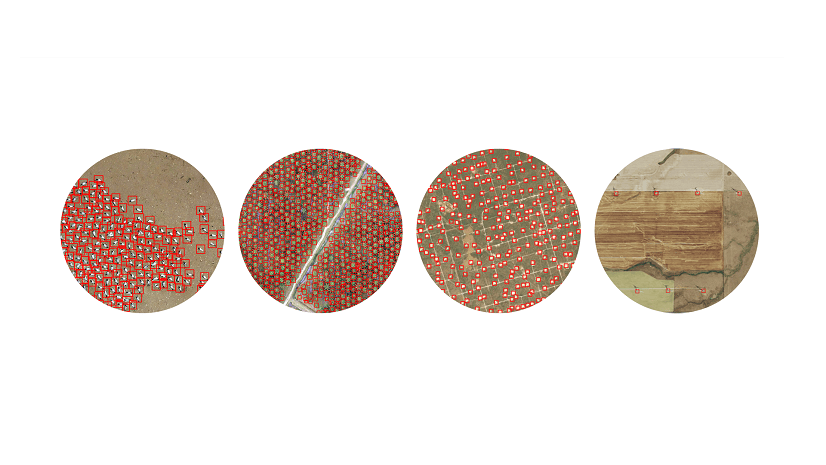

Orthoimages are often used for object detection, but they are not always the best because of the following reasons:

- Limited details: Orthoimages can make objects like fire hydrants and poles appear as dots or circles. However, oblique images captured from drones or street-view cameras provide more details of the objects, making them easier to detect.

- Blind spots: Tree canopies in orthoimages can completely conceal objects. The left image shows the location of an asset that we cannot see because of the tree canopies. The right image shows the same asset in a picture taken from the street.

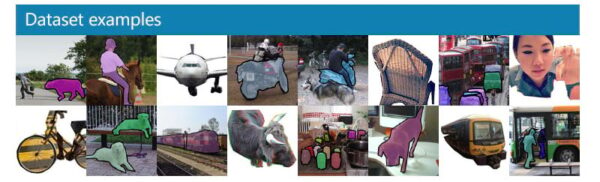

- Limited models and datasets: Open-source training datasets such as COCO (Common Objects in Context) dataset and pre-trained models are predominantly available for non-ortho images. They do not work as well on orthoimages.

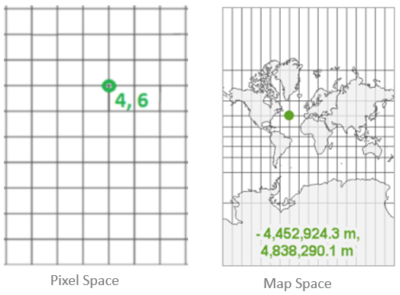

One solution to these challenges is utilizing oblique imagery from drones or street-view cameras. We can detect the objects in the Pixel Space of the oblique images and transform the detections to the Map Space. Map space utilizes a map-based coordinate reference system, while Pixel Space references the raw image space without rotations or distortions. When a model with Pixel Space reference system is used with an orthorectified image collection that contains images with camera information, first inferencing happens in Pixel Space and then the inferenced geometries are transformed to Map Space using the frame and camera information.

In this blog, we will use drone images taken from a new commercial development called the Packing House district, located in Redlands, California. We will build a model to detect parked cars. It can potentially help the decision makers understand the traffic in this new development. Our goal is to offer a workflow template and showcase its application through an example.

We need the following two for our workflow:

- A model that is trained to work with drone images. The model should use Pixel Space as a coordinate reference system.

- An orthorectified image collection that contains drone images with camera information including focal length and other sensor characteristics. GPS accuracy is important, include if available.

Now let us look at the steps.

Step 1: Build a model

Build the model in three steps (visual guide in video):

- Label images: label using the Label Objects for Deep Learning pane with Image Collection and Pixel Space options.

- Export image chips: next, use Export Training Data for Deep Learning tool with Reference System set to Pixel Space to export out the image chips.

- Train model: finally, use Train Deep Learning Model tool. Output model will have “ImageSpaceUsed”: “PIXEL_SPACE” in Esri Model Definition (emd) file.

Step 2: Create an orthorectified image collection

Create an orthorectified image collection in three steps (visual guide in video):

- Create workspace: use New Ortho Mapping Workspace wizard.

- Block Adjust: next, use tools in the Adjust and Refine groups to calculate the orientation of each image. If available, add GCPs and Tie Points.

- Limit duplicate Images: use Compute Candidate Items tool to identify the image candidates that best represent the mosaic area.

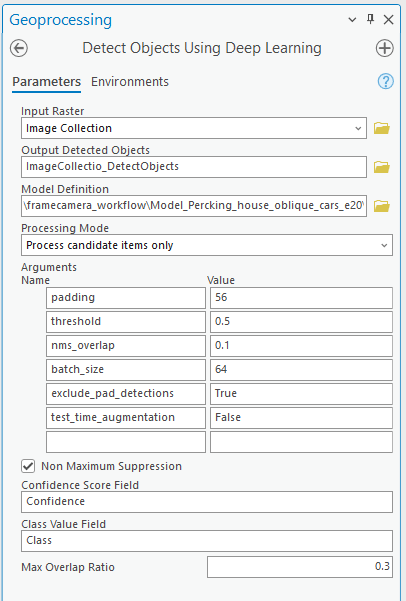

Steps 3: Detect Objects

Use the Detect Objects Using Deep Learning tool with the model and the image collection as inputs and the Processing Mode parameter set to the Process candidate items only and run the tool. This processing mode will make sure that detections first happen in the raw Pixels Space of each image, and then they are transformed into to Map Space of the Image Collection.

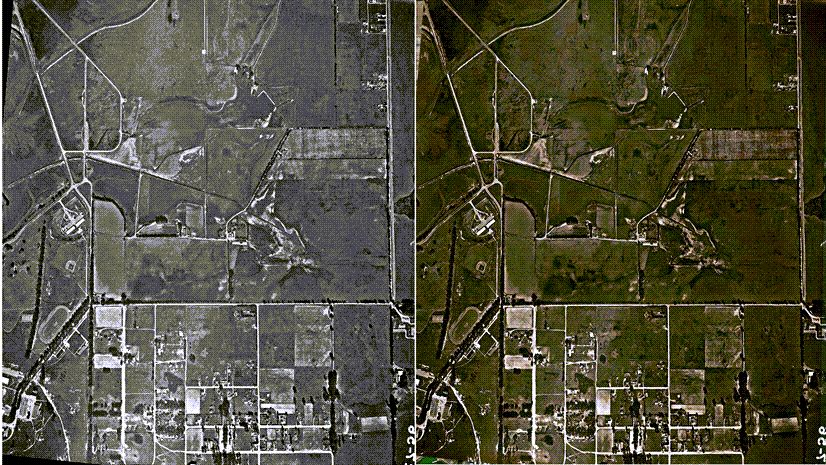

For a direct Pixel Space vs. Pixel Space-to-Map Space detection comparison, we ran the tool on both: a single drone image (Pixel Space) and the image collection (Pixel Space-to-Map Space).

Conclusion

In the blog, you gained knowledge about the benefits of oblique images in improving object detection. You explored the steps using a drone image set; however, you can apply these steps and concepts to images captured by Street-view cameras. Additionally, the workflow can be adapted for pixel classification tasks.

Did you find this blog insightful? We would love to hear your thoughts in the comments.

Article Discussion: