There’s a lot to love about ArcGIS Hub. It has beautifully simple layout tools that require no custom coding. It gets along with its map and app friends in the larger ArcGIS landscape. It can even help you manage volunteers, crowdsource information, and schedule community meetings. All you have to do to make a strong connection between your organization’s goals and ArcGIS Hub is bring your data.

ArcGIS Hub is first and foremost a tool that helps you use data to engage with your community. When you use data inside of a map or an app in ArcGIS Hub, you’re starting a conversation with your users. You’re basically saying “Here are some of the key facts about <insert issue you want to address>… let’s talk about this some more.” You can include maps to show where things are happening, surveys to solicit feedback, dashboards and charts to summarize data, videos and graphics to explain and capture someone’s attention, and much more. The best Hub sites pair a great layout with more than one of these tools.

With this wealth of tools for creating your interfaces and pages, it might seem like everything is straightforward. However, the core challenge of your relationship with ArcGIS Hub isn’t really about Hub or the many things you can do within it. The issue is your data. Where does your data live? How can you access it? What data should be shared with the public, and what is protected by law or policy? You will want to consider all of these issues as you develop your long-term relationship with ArcGIS Hub.

Chances are that if you’re reading this blog, you already have an idea of the problem you want to solve or the issue you want to tackle. You probably already know of some datasets that you could use to show how big a problem is or to track progress in solving it. The real questions you should be asking at this point are: “Where is my data stored?” and “How often do I need to see updates from it?” As we discuss data sources and how to integrate them into ArcGIS Hub, it’s important to focus on three topics: the type of data source, how frequently that source updates, and the best tool (or tools) to facilitate this process.

Data Sources

When we think of data sources, we usually think of the main, original system where data is created and/or updated, often by another department. This is typically called the “system of record,” and it is usually the best place to start to ensure that your Hub data is as accurate as possible. If you’re migrating from an existing open data system into ArcGIS Hub, that open data system is usually not the system of record… so dig deeper until you discover the original source. Data sources usually fall into one of three buckets (plus one special one):

| Data source | Description |
| --- | --- |
| File-based Storage | Static files such as CSVs, shapefiles, text files, or Excel spreadsheets, along with compound file-based formats such as the file geodatabase. These files could be exports from a system that are overwritten on a schedule and/or reports that are generated. |
| Databases | Continuously updated data stored in structured tables in a relational (SQL) database. This could be an enterprise geodatabase where the data is spatial (i.e., each record has some kind of location attached), a table from another business system that includes something like an address (but not in a “spatial” format), or simply transactional records in a table with no associated geographic location at all. |
| Data from APIs | APIs provide web-centric data access that can be used programmatically, typically over REST, returning JSON, XML, or other formats. Most APIs support features such as pagination, summarization, or queries that let you specify which data you want, such as “all of the 311 calls from the past 24 hours.” |
| ArcGIS Web Services | In many ways these services are identical to a generic API-based service, but ArcGIS services are a unique sub-type when it comes to Hub. Any web-based ArcGIS services published from ArcGIS Enterprise (including Server, Portal, GeoEvent) or ArcGIS Online (including hosted feature layers, ArcGIS Velocity, etc.) are, in theory, the easiest data storage format to incorporate into Hub. This means that the upcoming section on update cadence doesn’t fully apply… hold on… this will make more sense as you read further. |
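Both generic APIs and ArcGIS services are usually read one page at a time. Here is a minimal sketch of paging through a feature layer’s REST `query` endpoint. The `where`, `outFields`, `resultOffset`, `resultRecordCount`, and `f` parameters and the `exceededTransferLimit` response flag come from the ArcGIS REST API. The layer URL is hypothetical, and `fetch_json` is an injected stand-in for a real HTTP call (for example, one built on `urllib.request.urlopen`), so the paging logic can be exercised without a network.

```python
from typing import Callable, Iterator
from urllib.parse import urlencode

# Hypothetical feature layer URL; substitute your own service.
LAYER_URL = "https://example.com/arcgis/rest/services/Permits/FeatureServer/0"


def build_query_url(layer_url: str, where: str = "1=1", offset: int = 0, page_size: int = 1000) -> str:
    """Build a REST query URL that requests one page of features as JSON."""
    params = {
        "where": where,
        "outFields": "*",
        "resultOffset": offset,
        "resultRecordCount": page_size,
        "f": "json",
    }
    return f"{layer_url}/query?{urlencode(params)}"


def iter_features(
    fetch_json: Callable[[str], dict],
    layer_url: str,
    where: str = "1=1",
    page_size: int = 1000,
) -> Iterator[dict]:
    """Yield features page by page until the service reports no more records."""
    offset = 0
    while True:
        page = fetch_json(build_query_url(layer_url, where, offset, page_size))
        features = page.get("features", [])
        yield from features
        # exceededTransferLimit is set when more records remain beyond this page;
        # an empty page also stops the loop so it can never spin forever.
        if not page.get("exceededTransferLimit") or not features:
            break
        offset += len(features)
```

Injecting the fetch function keeps the paging rule separate from transport details such as authentication tokens or retries, which vary by deployment.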

Update Cadence

Getting data into ArcGIS Hub is important, but keeping that data updated is essential. For example, if you want to display the building permits for a city, there isn’t much value in only showing permits that were processed 142 days ago (or whenever you originally published your data). The question then becomes how often you need and want to keep your data fresh in ArcGIS Hub. Some of the most common update cadences we see are:

| Update cadence | Description |
| --- | --- |
| One-time | Data is made available in ArcGIS Hub once, in a static state, with no intent to update it. |
| On-demand | An update process is only run as needed and not on a set schedule. |
| Frequent | The update process is run on a set schedule that can be executed once a month/week/day or up to several times a day. |
| Near real-time | The data is continually updated as frequently as possible or at a speed that closely matches the source system. |
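For file-based sources on an On-demand or Frequent cadence, one common pattern is to rerun the ETL only when the exported file has actually changed. Below is a minimal sketch using SHA-256 from the standard library. It assumes the export is overwritten in place and that you persist the last-seen digest somewhere between runs; the `state` dict here is a hypothetical stand-in for that storage.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to limit memory use."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def needs_update(path: Path, state: dict) -> bool:
    """Return True (and record the new digest) if the file changed since the last check."""
    digest = file_digest(path)
    if state.get(str(path)) == digest:
        return False
    state[str(path)] = digest
    return True
```

A scheduled job can call `needs_update` first and skip the publish step when it returns `False`, so a nightly schedule doesn’t reprocess an unchanged export.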

What ArcGIS Hub does, and what it does not

Once you figure out the source of a dataset and the update cadence, you then have to determine what tool you want to use to extract, transform, and load (ETL) the data so it is available inside ArcGIS Hub. Before we explore this combination further, we need to understand a bit about how data “shows up” in Hub.


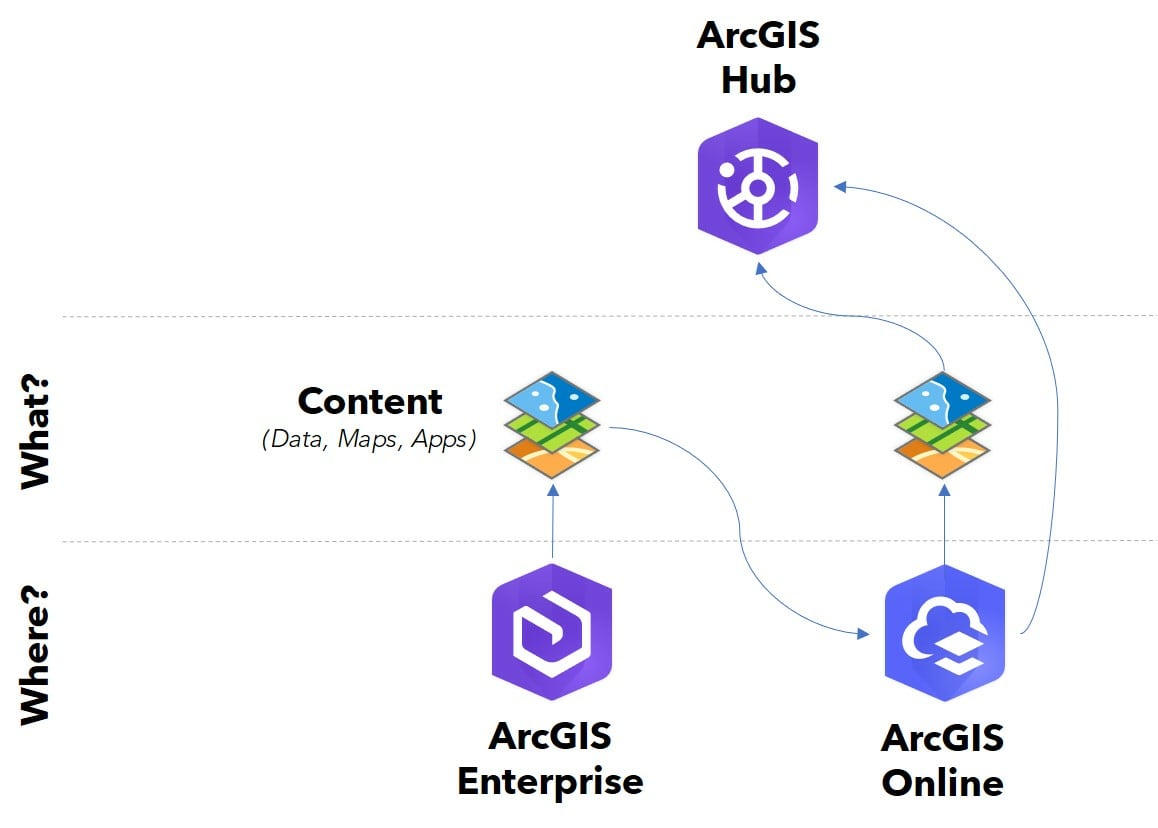

Hub doesn’t “store” data… it merely displays it. The data is actually stored in one of the supported sources: an ArcGIS Enterprise map service or feature layer, or an ArcGIS Online hosted feature service. These services are then registered with ArcGIS Online and shared to groups or to the public. Hub uses the concept of a “group” in ArcGIS Online to make content (data, maps, apps) visible in the Hub site of your choice. Remember earlier when I mentioned that ArcGIS services are unique? This is why: they already live in either ArcGIS Enterprise or ArcGIS Online. You just need to add them to the group your Hub site uses, and they will be discoverable in your site. All the other data types listed above must be ETL’d into either ArcGIS Enterprise or ArcGIS Online before they can be found and used in Hub. The choice now is which tool will serve you best.

Data Migration Workflows and Tools

It’s important to note that there are a lot of ETL tools in the world and most of them will work perfectly fine with most types of data. However, of this hardware store full of tools, I see only five being used consistently enough to recommend to users. Below you’ll find a list of the types of tools available to you, in order from simplest to most complex to use:

| Tool | Description |
| --- | --- |
| Using your Web Browser | Log in to either ArcGIS Enterprise or ArcGIS Online and upload your file through the browser. It literally doesn’t get any simpler than this. There are some limitations on file types and such, but if you have a good understanding of what ArcGIS Online can do, you’ll get the idea very quickly. Non-GIS professionals can usually handle this with ease and a little guidance. Think of this as your trusty hammer. |
| ArcGIS Pro | Open ArcGIS Pro and publish your data to Portal or Online. This requires a little more understanding of GIS and of ArcGIS Pro as a program, but it’s still not terribly difficult unless you really want to go there. Think of this as a set of tools. |
| Data Interoperability | This add-on extension works alongside ArcGIS Pro and provides a flow-chart-like way of building a repeatable ETL process. The workspaces you create can be saved and set to run on a schedule with Windows Task Scheduler or something similar. This is a little more complex, but if you need to repeat a process without touching it (and don’t want to learn Python), this is your tool. Think of this as a specialty saw that a carpenter might have… very powerful but relatively simple to use. |
| ArcGIS API for Python | Do you know Python? Do you want to script your routine? If you answered yes to both of these questions and your ETL process is a little more complex or needs to run very frequently, then this is the tool for you. Think of this as a whole set of really powerful tools. The ArcGIS API for Python can handle all of the publishing and data update processes, and Python has an extensive ecosystem of third-party data connectors and conversion tools, making this an excellent resource for those who need a customized solution. |
| Koop | Each of the previous tools runs as a one-time process, though each can also be scheduled. If you want something closer to real time, such as an ETL that converts data on the fly whenever a user requests the service, Koop is a great option. In simple terms, Koop is a JavaScript-based framework that lets you build a service that translates data from some format, on the fly, into the REST API standard that ArcGIS Enterprise/Online uses. This new service runs on demand and displays whatever you’ve configured it to generate. Think of this as the industrial-level tools you might find being used to build an aircraft carrier. |
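Koop itself is written in JavaScript, so the snippet below is only a Python illustration of the translation step at its heart. It turns arbitrary source records into GeoJSON, which, as we understand Koop’s provider model, is what a provider hands back to Koop before Koop serves it as a feature service. The field names `lon` and `lat` are hypothetical.

```python
from typing import Iterable


def records_to_geojson(records: Iterable[dict], lon_field: str = "lon", lat_field: str = "lat") -> dict:
    """Convert flat records with coordinate fields into a GeoJSON FeatureCollection.

    Records missing either coordinate are skipped rather than emitted without geometry.
    """
    features = []
    for rec in records:
        lon, lat = rec.get(lon_field), rec.get(lat_field)
        if lon is None or lat is None:
            continue
        # Every non-coordinate field becomes a feature property.
        props = {k: v for k, v in rec.items() if k not in (lon_field, lat_field)}
        features.append({
            "type": "Feature",
            # GeoJSON orders point coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [float(lon), float(lat)]},
            "properties": props,
        })
    return {"type": "FeatureCollection", "features": features}
```

Because this runs per request in an on-the-fly service, it should stay cheap. Heavier enrichment is usually a better fit for a scheduled ETL.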

Combining Source and Cadence, then choosing a Tool

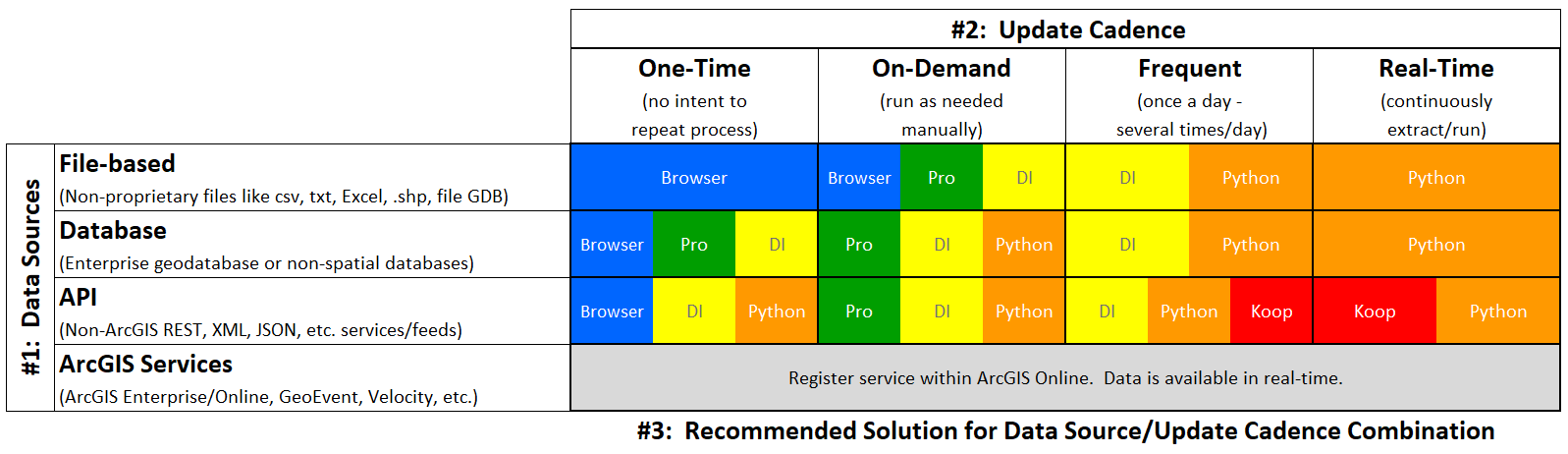

The combination of data source, update cadence, and your (or your team’s) technical ability will determine which tool you use. For one-off uploads of static data in a simple format, the browser is probably your best option. On the other hand, if you have real-time data stored in a third-party API, you should use either Koop or the ArcGIS API for Python. The matrix above provides some general guidance. Keep in mind that there are many unique cases where these recommendations won’t be the most appropriate. The matrix lists data sources on the Y axis and update cadence patterns on the X axis, and each cell lists the options that may work well for that combination. The colors correspond to the ETL tools themselves, which are listed below the table.
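The matrix itself is an image, but the combinations this article spells out in its text and in the examples that follow can be captured in a small lookup table. This is a partial, hypothetical encoding. It holds only those named pairings, uses the cadence names from the Update Cadence list, and returns an empty tuple for anything else so you fall back to the full matrix.

```python
from typing import Dict, Tuple

# Partial, hypothetical encoding of the source/cadence matrix: only the cells this
# article names. Keys are (data source, update cadence); values are suggested tools.
SUGGESTED_TOOLS: Dict[Tuple[str, str], Tuple[str, ...]] = {
    ("File-based", "One-time"): ("Web browser",),
    ("Database", "Frequent"): ("Data Interoperability", "ArcGIS API for Python"),
    ("API", "Near real-time"): ("Koop", "ArcGIS API for Python"),
}


def suggest_tools(source: str, cadence: str, knows_python: bool = True) -> Tuple[str, ...]:
    """Return the tools named for a combination, dropping Python if the team doesn't use it.

    An empty tuple means the combination isn't covered here; consult the full matrix.
    """
    options = SUGGESTED_TOOLS.get((source, cadence), ())
    if not knows_python:
        options = tuple(t for t in options if t != "ArcGIS API for Python")
    return options
```

The `knows_python` flag mirrors the skill-based choice in the pothole example, where a team without Python experience narrows the options to Data Interoperability.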

Examples

Here are a few hypothetical examples that might help make the matrix above easier to apply to your workflows.

Building Permits

The GIS team plans on building a Hub initiative that will inform the public about the permitting process and will also use a combination of heat maps, dashboards, and charts to show citizens where approved permits are being issued each month.

- Data Source: The business system that is used by the permitting staff has a SQL-based backend, but can create nightly “reports” in CSV that include the unique ID, address, issue date, type, status, and total fees of each permit. This means the team will use the “File-based” row in the matrix.

- Update Cadence: The GIS team also discovers from the permitting staff that in an average month, they process no more than 200 permits, and the status of an existing permit doesn’t change very quickly (permits are often valid for several months or up to a year). With an update speed this slow, the team could either use the “On-Demand” or “Frequent” column in the matrix.

By combining the File-based row and On-Demand/Frequent columns in the matrix the GIS team discovers that there are four possible tools that can be used. Of these four tools, only one appears in both columns and can save a workflow and make it repeatable: Data Interoperability.
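Whichever tool the team picks, the transform step for this CSV is straightforward. Here is a minimal standard-library sketch that counts approved permits per month, the figure the dashboards and charts would summarize. The column names are hypothetical stand-ins for the report’s unique ID, address, issue date, type, status, and total fees, and the issue date is assumed to be in ISO `YYYY-MM-DD` form. Geocoding the addresses, which the heat map would also need, is left out.

```python
import csv
import io
from collections import Counter
from datetime import date


def approved_per_month(csv_text: str) -> dict:
    """Count permits with status 'Approved' by issue month, keyed as 'YYYY-MM'."""
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["status"].strip().lower() != "approved":
            continue
        issued = date.fromisoformat(row["issue_date"])
        counts[f"{issued.year:04d}-{issued.month:02d}"] += 1
    # Sort by month so the result reads chronologically.
    return dict(sorted(counts.items()))
```

For the nightly report, you would pass in the file contents, for example `approved_per_month(Path("permits.csv").read_text())`, where the file name is hypothetical.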

Pothole Repairs by Street Centerline segments

The public works department of a city is interested in sharing the locations of pothole repairs over time. The team will use ArcGIS Hub to both share the pothole hotspots on major roads and help the public report new potholes that haven’t been fixed yet.

- Data Source: Pothole locations are recorded in the Public Works department’s work order management system. Each pothole is tied to a street segment from GIS using a unique segment ID number, and reports are accessible in bulk through a SQL query of the work order system. This means that the team will need to use the “Database” row in the matrix.

- Update Cadence: Pothole repairs are done throughout the workday but all field information is required to be completed by 5 pm each day. This means that the team will need to use the “Frequent” column in the matrix.

By combining the Database row and Frequent column in the matrix, the public works team discovers that they have two options: Data Interoperability or the ArcGIS API for Python. The public works team are not Python programmers and only need a tool that can join the pothole data to street centerline data from the GIS team’s enterprise geodatabase. In this case, they select Data Interoperability to help update the Hub initiative.
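The team chose Data Interoperability, but the join at the core of this workflow is the same in any tool. The sketch below uses an in-memory SQLite database purely so it can run anywhere. The table names, column names, and sample rows are all hypothetical stand-ins for the work order system and the street centerline table in the enterprise geodatabase.

```python
import sqlite3


def repairs_per_segment(conn: sqlite3.Connection, road_class: str = "Major") -> list:
    """Return (segment_id, street_name, repair_count) for segments of one road class."""
    sql = """
        SELECT s.segment_id, s.street_name, COUNT(w.work_order_id) AS repairs
        FROM street_segments AS s
        JOIN work_orders AS w ON w.segment_id = s.segment_id
        WHERE s.road_class = ?
        GROUP BY s.segment_id, s.street_name
        ORDER BY repairs DESC, s.segment_id
    """
    return conn.execute(sql, (road_class,)).fetchall()


def demo_connection() -> sqlite3.Connection:
    """Build a tiny in-memory database with made-up sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE street_segments (segment_id INTEGER PRIMARY KEY, street_name TEXT, road_class TEXT);
        CREATE TABLE work_orders (work_order_id INTEGER PRIMARY KEY, segment_id INTEGER, completed_date TEXT);
        INSERT INTO street_segments VALUES
            (101, 'Main St', 'Major'), (102, 'Oak Ave', 'Minor'), (103, 'River Rd', 'Major');
        INSERT INTO work_orders VALUES
            (1, 101, '2024-03-01'), (2, 101, '2024-03-02'), (3, 102, '2024-03-02'), (4, 103, '2024-03-03');
    """)
    return conn
```

Filtering on road class keeps the output focused on major roads, matching the hotspot map the department wants to share.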

Fire Calls

A county wants to use 911 calls to their fire department to help the public understand where fires occur. Ultimately, the goal of their Hub initiative is to encourage residents in fire-prone areas to request and install more free smoke alarms using data from their dispatch system.

- Data Source: The county’s dispatch system captures the 911 call time, incident address, and issue type, and exposes them through an API. This means we will use the “API” row in the matrix.

- Update Cadence: While the data will primarily be aggregated in dashboards and maps, the fire chief wants the data shown to be “real-time.” This means we will use the “Real-time” column in the matrix.

By combining the API row and Real-time column in the matrix, the county discovers that the two suggested tools are Koop and the ArcGIS API for Python. The county plans to enrich the 911 call locations with other spatial data (like commission districts) and wants to store the result in a hosted feature layer. In this case, the county selects the ArcGIS API for Python as its ETL tool.
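The enrichment the county describes is essentially a point-in-polygon join. In a production script this would normally be delegated to a spatial library. The standard-library sketch below only shows the logic. It applies a simple ray-casting test to hypothetical district polygons given as plain coordinate rings, then shapes each call as an Esri JSON feature (`attributes` plus a point `geometry` with `x`/`y`), the form that feature layer edit operations accept. Coordinates are assumed to already be in the target layer’s spatial reference.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, ring: List[Point]) -> bool:
    """Ray casting: toggle 'inside' each time a rightward ray from pt crosses an edge."""
    x, y = pt
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed
        # (this also guarantees y1 != y2, so the division below is safe).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def find_district(pt: Point, districts: Dict[str, List[Point]]) -> Optional[str]:
    """Return the name of the first district containing pt, or None."""
    for name, ring in districts.items():
        if point_in_polygon(pt, ring):
            return name
    return None


def to_esri_feature(call: dict, districts: Dict[str, List[Point]]) -> dict:
    """Turn a call record with x/y fields into an Esri JSON point feature with a district."""
    pt = (call["x"], call["y"])
    attrs = {k: v for k, v in call.items() if k not in ("x", "y")}
    attrs["district"] = find_district(pt, districts)
    return {"attributes": attrs, "geometry": {"x": pt[0], "y": pt[1]}}
```

Points on a shared boundary may land in either district with this simple test. A real spatial library handles such edge cases more rigorously.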

Final Thoughts

Migrating data into ArcGIS Hub is not a one-size-fits-all process. We think this is a good thing because it lets our users choose from a selection of Esri-supported tools that best meet their needs and skill level. Don’t like Python? Use Data Interoperability. Have a complex situation? Use a SQL statement embedded within a Python script. Either way, ArcGIS offers tools that fit your needs while also giving you some guidance (wear your safety glasses!) to make deploying them easy and fast. Here are a few things to keep in mind when you start down your Hub data migration project:

While I’ve talked quite a bit about “getting data into Hub,” it goes without saying that ArcGIS Hub doesn’t actually store the data we’re talking about. The data is stored either in ArcGIS Online or in ArcGIS Enterprise, which can be hosted in your own environment. The concepts behind migrating data into ArcGIS also apply to other technologies that rely on ArcGIS-formatted data services.

The matrix above shows a defined list of ETL tools, but in reality there are many situations where combinations of technologies are more appropriate. Enterprising groups in the larger ArcGIS user community are using and testing newer tools like Microsoft Power Automate, Azure Functions, and more.

So, try some of these nuts-and-bolts techniques and handy ETL tools with your data to build a closer connection between ArcGIS Hub and your organization. Stay tuned for more posts in this series, where we will walk you through a few different data migration scenarios, including sample workflows/scripts and links to other helpful resources.

Hungry for more ArcGIS Hub content and learning?

- Attend webinars and meet-ups to discover how others are using ArcGIS Hub

- View ArcGIS Hub conference presentations, demos, and how-to videos

- Sign up for the ArcGIS Hub e-newsletter

- Follow us on Twitter @ArcGISHub
